Google doesn't have the best track record when it comes to image-generating AI.

In February, the image generator built into Gemini, Google's AI-powered chatbot, was found to be randomly injecting gender and racial diversity into prompts about people, resulting in offensive inaccuracies. Among other things, it produced images of racially diverse Nazis.

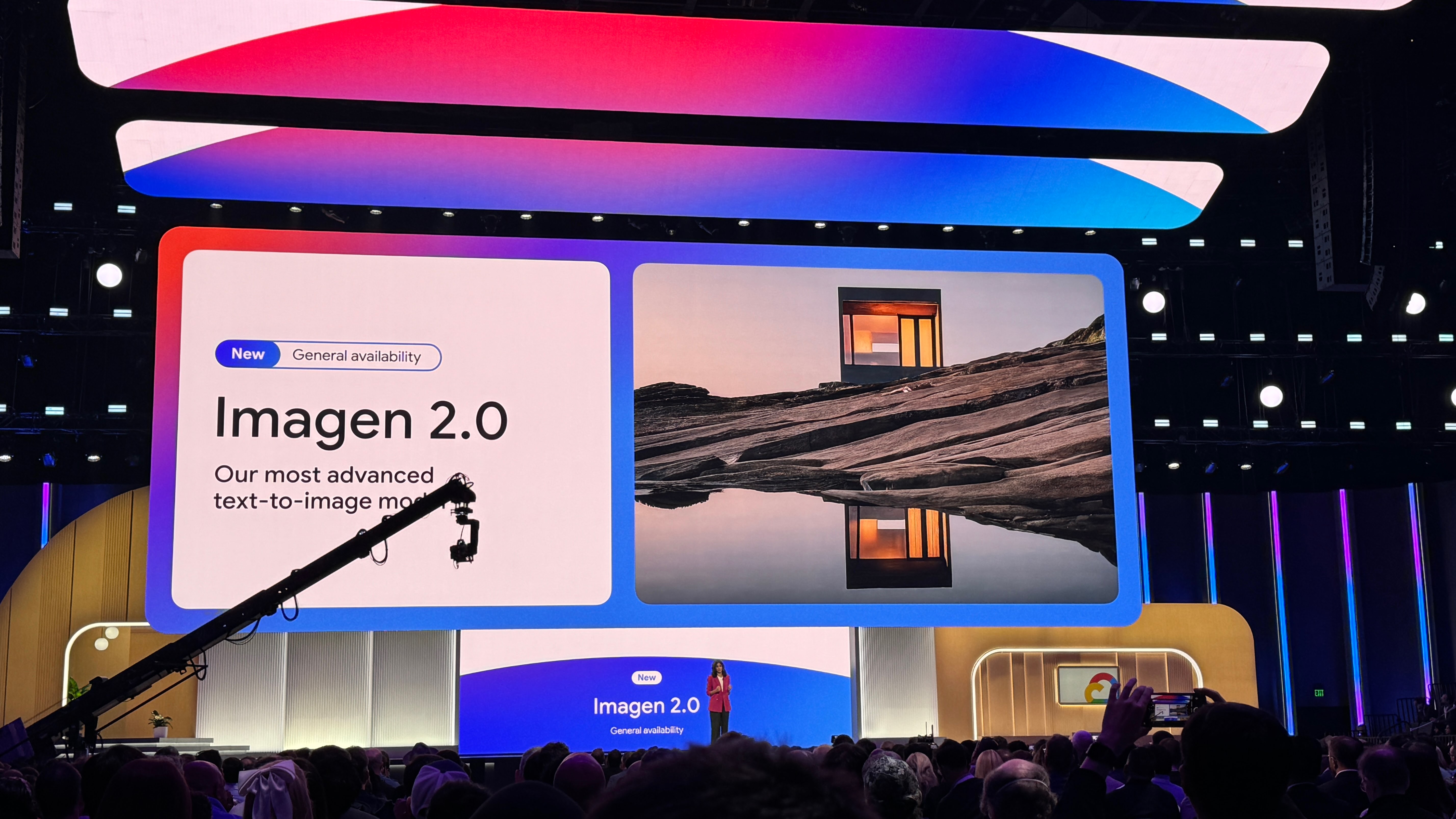

Google pulled the generator, vowing to improve it and eventually re-release it. While we wait for its return, the company is rolling out an enhanced version of Imagen 2, its image generation tool, within its Vertex AI developer platform, albeit one with a decidedly enterprise focus. Google announced the update at its annual Cloud Next conference in Las Vegas.

Image credit: Frederic Lardinois/TechCrunch

Imagen 2, which is actually a family of models, launched in December 2023 after being previewed at Google's I/O conference in May 2023. Like OpenAI's DALL-E and Midjourney, it can create and edit images based on text prompts. Of interest to businesses, Imagen 2 can render text, emblems, and logos in multiple languages and optionally overlay those elements onto existing images, such as business cards, apparel, and products.

After launching first in preview, image editing with Imagen 2 is now generally available in Vertex AI, along with two new capabilities: inpainting and outpainting. Inpainting and outpainting, features that other popular image generators such as DALL-E have offered for some time, can be used to remove unwanted parts of an image, add new components, and expand the borders of an image to create a wider field of view.

But the real meat of the Imagen 2 upgrade is what Google calls “text-to-live images.”

Imagen 2 can now create short, four-second videos from text prompts, similar to AI-powered clip generation tools like Runway, Pika, and Irreverent Labs. True to Imagen 2's corporate focus, Google is pitching live images as a tool for marketers and creatives, for instance as a GIF generator for ads showing nature, food, and animals, the subject matter on which Imagen 2 has been fine-tuned.

Google says live images can capture “a range of camera angles and motions” while “supporting consistency over the entire sequence.” For now, though, the resolution is low: 360 pixels by 640 pixels. Google promises this will improve in the future.

To allay (or at least attempt to allay) concerns about the potential for creating deepfakes, Google says Imagen 2 will use SynthID, an approach developed by Google DeepMind, to apply invisible, cryptographic watermarks to live images. Of course, detecting these watermarks, which Google claims are resilient to edits such as compression, filters, and color adjustments, requires a Google-provided tool that isn't available to third parties.

And, no doubt hoping to avoid another generative media controversy, Google insists that live image generation is “filtered for safety.” A spokesperson told TechCrunch via email: “The Imagen 2 models in Vertex AI have not experienced the same issues as the Gemini app. We continue to test extensively and engage with our customers.”

Image credit: Frederic Lardinois/TechCrunch

But generously assuming for a moment that Google's watermarking technology, bias mitigations, and filters are as effective as it claims, can live images compete with the video generation tools already out there?

Not really.

Runway can generate 18-second clips at much higher resolutions. Stability AI's video clip tool, Stable Video Diffusion, offers greater customizability (in terms of frame rate). And OpenAI's Sora, which isn't commercially available yet, seems poised to blow away the competition with the photorealism it can achieve.

So what are the actual technical benefits of live images? I'm not sure. And I don't think I'm being too harsh.

After all, Google is behind some truly impressive video generation technologies like Imagen Video and Phenaki. Phenaki is one of Google's more interesting experiments in text-to-video, turning long, detailed prompts into “movies” lasting more than two minutes. However, the clips are low resolution, low frame rate, and only moderately consistent.

Given how the generative AI revolution caught Google CEO Sundar Pichai by surprise, and recent reports suggesting the company is still struggling to keep pace with its rivals, it's no wonder the product feels underwhelming. But it's disappointing all the same. I can't help but wonder whether there's a better product lurking, or could be, in Google's skunkworks.

Models like Imagen are typically trained on enormous numbers of examples drawn from public sites and datasets around the web. Many generative AI vendors see training data as a competitive advantage and thus keep it, and any information about it, close to the chest. But training data details are also a potential source of IP-related litigation, which gives vendors another reason not to reveal much.

As I always do with announcements about generative AI models, I asked Google what data was used to train the updated Imagen 2, and whether creators whose work may have been swept up in the model training process will be able to opt out at some point in the future.

Google told me only that its models are trained “primarily” on public web data drawn from “blog posts, media transcripts, and public conversation forums.” Which blogs, transcripts, and forums? It's anyone's guess.

The spokesperson pointed to Google's web publisher controls, which let webmasters prevent the company from scraping data, including photos and artwork, from their websites. But Google wouldn't commit to releasing an opt-out tool or, alternatively, compensating creators for their (unwitting) contributions, a step that many of its competitors, including OpenAI, Stability AI, and Adobe, have taken.

Another point worth mentioning: text-to-live images aren't covered by Google's generative AI indemnification policy, which protects Vertex AI customers from copyright claims related to Google's use of training data and the outputs of its generative AI models. That's because text-to-live images are technically still in preview, and the policy covers only generative AI products that are generally available (GA).

Regurgitation, where a generative model spits out a mirror copy of an example (such as an image) it was trained on, is a legitimate concern for enterprise customers. Both informal and academic research have shown that the first-generation Imagen wasn't immune to it, producing recognizable photos of people, artists' copyrighted works, and more when prompted in certain ways.

Barring controversy, technical issues, or some other major unexpected setback, text-to-live images will enter GA at some point. But when it comes to live images today, Google is basically saying, “Use at your own risk.”