Adobe says it's building an AI model to generate video. But it isn't revealing exactly when the model will be released, or much about it beyond the fact that it exists.

Offered as a sort of answer to OpenAI's Sora, Google's Imagen 2 and models from the growing number of startups in the nascent generative AI video space, Adobe's model, part of the company's expanding Firefly family of generative AI products, will make its way into Premiere Pro, Adobe's flagship video editing suite, sometime later this year, Adobe says.

Like many generative AI video tools today, Adobe's model creates footage from scratch (from either a prompt or reference images). It also powers three new features in Premiere Pro: Object Addition, Object Removal and Generative Extend.

They're fairly self-explanatory.

Object Addition lets users select a segment of a video clip (the upper third, say, or the lower left corner) and enter a prompt to insert objects within that segment. In a briefing with TechCrunch, an Adobe spokesperson showed a still of a real-world briefcase filled with diamonds generated by Adobe's model.

AI-generated diamonds, provided by Adobe.

Object Removal removes objects from clips, such as a boom mic or a coffee cup in the background of a shot.

Removing objects with AI. Note that the results aren't quite perfect.

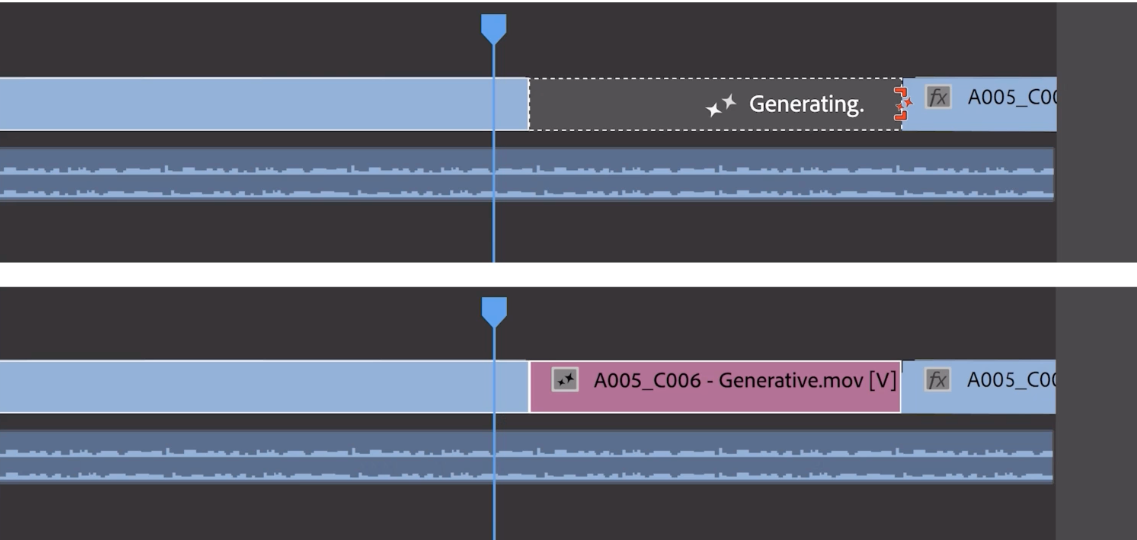

As for Generative Extend, it adds a few frames to the beginning or end of a clip (unfortunately, Adobe wouldn't say how many). Generative Extend isn't meant to create whole scenes, but rather to add buffer frames that sync up with a soundtrack or hold on a shot for an extra beat, for instance to add emotional weight.

Image credit: Adobe

To address the deepfake fears that inevitably crop up around generative AI tools like these, Adobe says it's bringing Content Credentials (metadata that identifies AI-generated media) to Premiere. Content Credentials, a media provenance standard that Adobe backs through its Content Authenticity Initiative, is already built into Photoshop and is a component of Adobe's image-generating Firefly models. In Premiere, it will indicate not only which content was AI-generated but also which AI model was used to generate it.

I asked Adobe what data (images, videos and so on) was used to train the model. The company wouldn't say, nor would it say how (or whether) it's compensating contributors to the data set.

Last week, Bloomberg, citing sources familiar with the matter, reported that Adobe is paying photographers and artists on its stock media platform, Adobe Stock, up to $120 for submitting short video clips to train its video generation model. The pay is said to range from around $2.62 per minute of video to around $7.25 per minute depending on the submission, with higher-quality footage commanding correspondingly higher rates.

That would be a departure from Adobe's current arrangement with the Adobe Stock artists and photographers whose work it uses to train its image generation models. The company pays those contributors an annual bonus rather than a one-time fee, depending on the volume of content they have in Stock and how it's being used. That bonus, however, follows an opaque formula and isn't guaranteed from year to year.

Bloomberg's reporting, if accurate, depicts an approach in stark contrast to that of generative AI video rivals like OpenAI, which is said to have scraped publicly available web data, including YouTube videos, to train its models. YouTube CEO Neal Mohan recently said that using YouTube videos to train OpenAI's text-to-video generator would violate the platform's terms of service, highlighting how legally shaky the fair use argument made by OpenAI and others is.

Companies including OpenAI are being sued over allegations that they violated IP law by training their AI on copyrighted content without crediting or paying the owners. Adobe seems intent on avoiding that fate, as do its sometime generative AI competitors Shutterstock and Getty Images (which also have arrangements to license model training data). With its IP indemnity policy, Adobe is positioning itself as a verifiably "safe" option for enterprise customers.

On the subject of payment, Adobe isn't saying how much it will cost customers to use the upcoming video generation features in Premiere; presumably, pricing is still being worked out. But the company did reveal that the payment scheme will follow the generative credits system established with its earlier Firefly models.

For customers with a paid Adobe Creative Cloud subscription, generative credits renew at the beginning of each month, with allotments ranging from 25 to 1,000 credits per month depending on the plan. As a general rule, more complex workloads (such as higher-resolution images or multiple-image generations) require more credits.

The big question in my mind is: will Adobe's AI-powered video features be worth whatever they end up costing?

The Firefly image generation models so far have been widely derided as underwhelming and flawed compared to Midjourney, OpenAI's DALL-E 3 and other competing tools. The lack of a release window for the video model doesn't instill much confidence that it will avoid the same fate. Neither does the fact that Adobe declined to show me live demos of Object Addition, Object Removal and Generative Extend, insisting instead on a prerecorded sizzle reel.

Perhaps to hedge its bets, Adobe says it's also in talks with third-party vendors about integrating their video generation models into Premiere to power tools like Generative Extend.

One of those vendors is OpenAI.

Adobe says it's working with OpenAI on ways to bring Sora into the Premiere workflow. (An OpenAI tie-up makes sense given the AI startup's recent overtures to Hollywood; tellingly, OpenAI CTO Mira Murati is scheduled to attend this year's Cannes Film Festival.) Other early partners include Pika, a startup building AI tools to generate and edit videos, and Runway, one of the first vendors on the market with a generative video model.

An Adobe spokesperson said the company is open to working with other companies in the future.

Now, to be clear, these integrations are at present more of a thought experiment than a working product. Adobe repeatedly stressed to me that they're in "early preview" and "research" stages, not something customers can expect to try anytime soon.

And that captures the overall tone of Adobe's generative video presser.

With these announcements, Adobe is clearly trying to signal that it's thinking about generative video, if only in a preliminary sense. It would be foolish not to: getting caught flat-footed in the generative AI race means risking the loss of a valuable potential new revenue stream, assuming the economics eventually work out in Adobe's favor. (AI models are, after all, expensive to train, run and serve.)

But what it's showing, mere concepts, frankly isn't very compelling. With Sora already in the wild and more innovations surely coming down the pipeline, the company has a lot to prove.