Businesses are amassing more data than ever to fuel their AI ambitions, but they are also concerned about who has access to this data, which is often highly private in nature. PVML offers an interesting solution that combines a ChatGPT-like tool for data analysis with the safety guarantees of differential privacy. Using retrieval-augmented generation (RAG), PVML can access a company's data without moving it, which removes another security concern.

The Tel Aviv-based company recently announced that it has raised $8 million in a seed round led by NFX, with participation from FJ Labs and Gefen Capital.

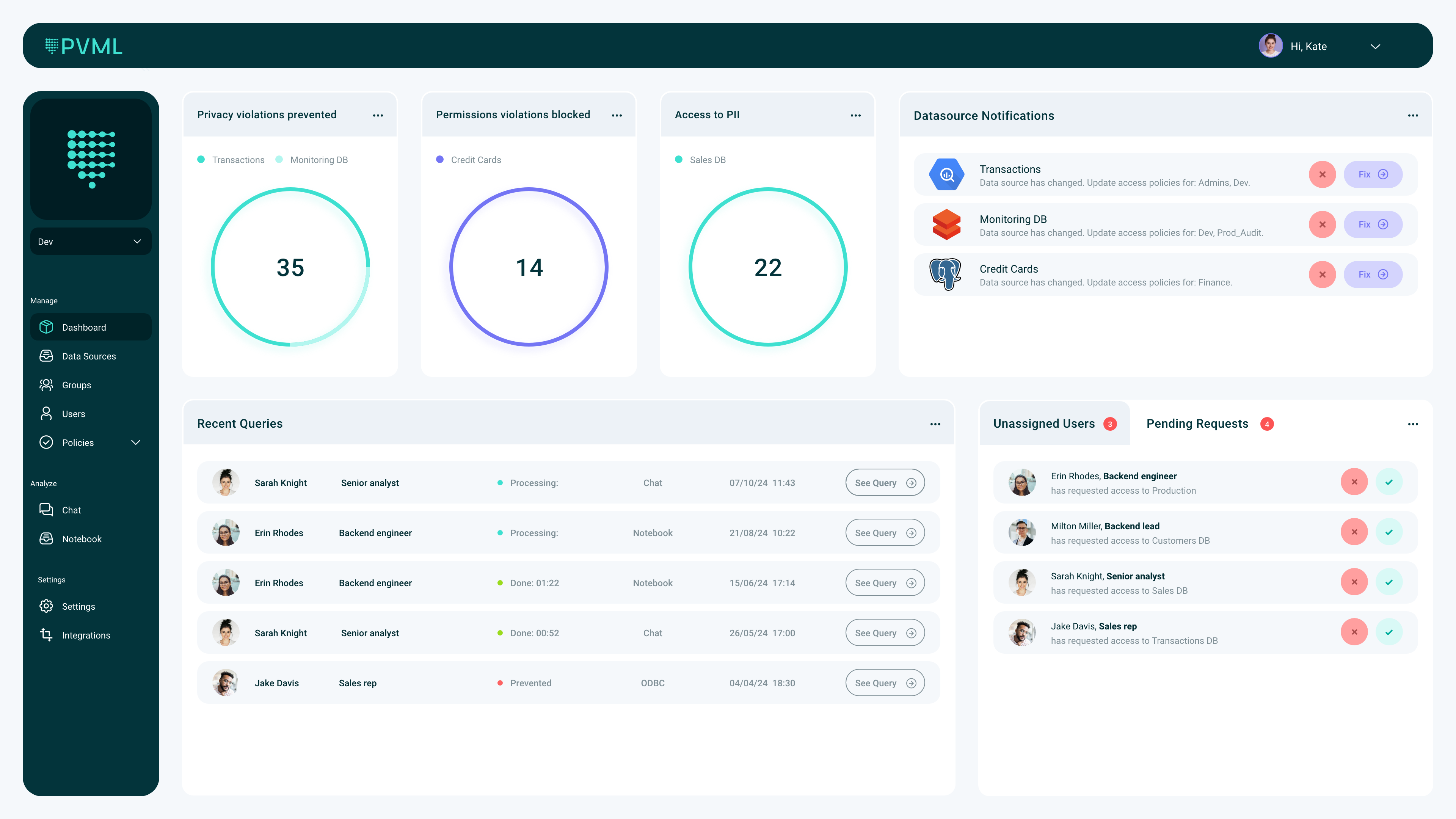

Image credit: PVML

The company was founded by husband-and-wife team Shachar Schnapp (CEO) and Lina Galperin (CTO). Schnapp earned his PhD in computer science, specializing in differential privacy, and then worked on computer vision at General Motors. Galperin earned a master's degree in computer science with an emphasis on AI and natural language processing and worked on machine learning projects at Microsoft.

“A lot of our experience in this field comes from working at big corporations and large companies, where we saw that things were not as efficient as we, perhaps as naive students, had expected,” Galperin said. “The main value we want to bring to organizations as PVML is the democratization of data. This can only happen if, on the one hand, you protect this highly sensitive data and, on the other hand, allow easy access to it, which today is synonymous with AI. Everyone wants to analyze their data using free text. It's much easier, faster, and more efficient, and our secret sauce, differential privacy, makes this integration very easy.”

Differential privacy is not a new concept. The core idea is to ensure the privacy of individual users in large datasets and to provide mathematical guarantees for that. One of the most common ways to accomplish this is to introduce some randomness into the data, in a way that does not materially change the results of the analysis.
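To make the idea of "adding randomness without changing the analysis" concrete, here is a minimal sketch of the textbook Laplace mechanism applied to a counting query. It is not PVML's algorithm, which the company has not described in detail; the function names `laplace_noise` and `private_count` and the salary data are hypothetical, chosen only for illustration. Note that the underlying records are left untouched: noise is added to each query's answer, not to the stored data.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample from Laplace(0, scale) as the difference of two exponentials.

    expovariate(1/scale) has mean `scale`, and the difference of two such
    independent draws is Laplace-distributed with the same scale.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return an epsilon-differentially private count of matching records.

    A counting query has sensitivity 1: adding or removing one person changes
    the true count by at most 1, so Laplace noise with scale 1/epsilon gives
    epsilon-differential privacy. Smaller epsilon means more noise and more privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical example data: salaries of individual employees.
salaries = [52_000, 61_000, 75_000, 98_000, 120_000, 47_000, 88_000]
rng = random.Random(0)
answer = private_count(salaries, lambda s: s > 80_000, epsilon=0.5, rng=rng)
print(f"noisy count of salaries above 80k: {answer:.1f}")
```

Any single answer is perturbed, so no one can tell with confidence whether a particular employee is in the dataset, yet averaged over many queries the noise cancels out around the true count. Production systems also have to track the total privacy budget spent across queries, which this sketch omits.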

The team argues that today's data access solutions are inefficient and create a lot of overhead. For example, the process of making data securely accessible to employees often requires removing large amounts of it, which can be counterproductive because the redacted data may no longer be usable for some tasks. (In addition, the extra lead time needed to access the data means real-time use cases are often impossible.)

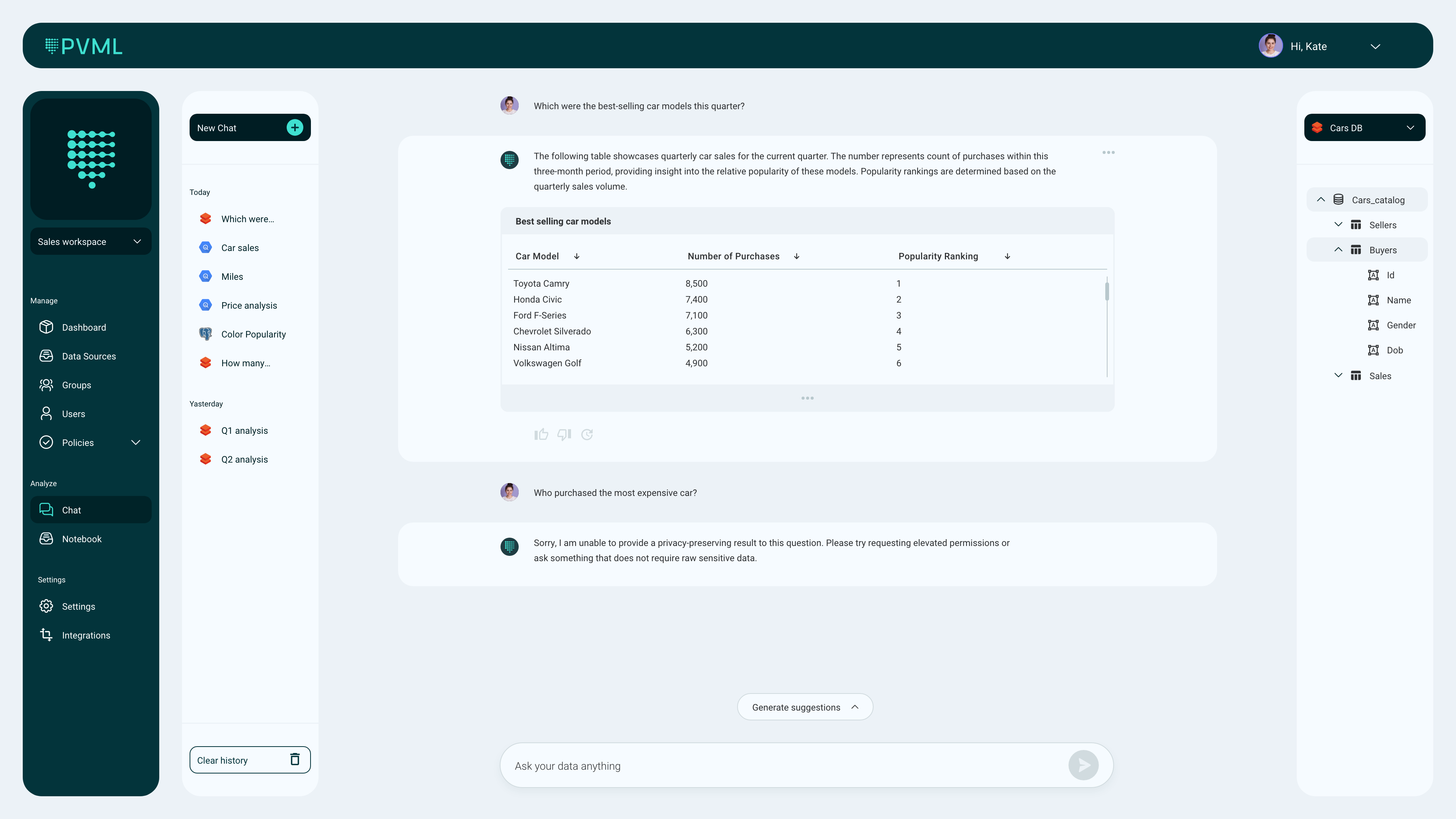

Image credit: PVML

The promise of differential privacy is that PVML's users do not have to modify the original data. This avoids almost all of the overhead and safely unlocks this information for AI use cases.

Virtually every major technology company now uses differential privacy in some form and makes its tools and libraries available to developers. The PVML team argues, however, that most of the data community has not yet put it into practice.

“Current knowledge about differential privacy is more theoretical than practical,” Schnapp said. “We decided to put theory into practice, and that's exactly what we've done. We've developed practical algorithms that work best on data in real-world scenarios.”

None of the work on differential privacy would matter if PVML's actual data analysis tools and platform weren't useful. The most obvious use case here is the ability to chat with your data while guaranteeing that no sensitive data leaks into the chat. Using RAG, PVML can bring hallucinations down to nearly zero, and because the data stays in place, the overhead is minimal.

But there are other use cases as well. Schnapp and Galperin pointed out that differential privacy also allows companies to share data between business units. It may also allow some companies to monetize access to their data by offering it to third parties.

“In the stock market today, 70% of trades are performed by AI,” said Gigi Levy Weiss, general partner and co-founder of NFX. “This is a harbinger of things to come, and organizations that adopt AI today will be a step ahead tomorrow. But companies are afraid to connect their data to AI because they fear exposure, and for good reason. PVML's unique technology creates an invisible layer of protection and democratizes access to data, enabling monetization use cases today and paving the way for tomorrow.”