Keeping up with an industry as fast-moving as AI is a tall order. So until an AI can do it for you, here's a handy roundup of recent stories in the world of machine learning, along with notable research and experiments we didn't cover on their own.

This week, Meta released the latest in its Llama series of generative AI models: Llama 3 8B and Llama 3 70B. Capable of analyzing and writing text, the models are "open source," Meta said, and intended to be a "foundational piece" of systems that developers design with their unique goals in mind.

“We believe these are best-in-class open source models,” Meta wrote in a blog post. “We embrace the open source ethos of releasing early and often.”

There's just one problem: the Llama 3 models aren't really "open source," at least not in the strictest definition.

Open source implies that developers can use the models however they choose, without restriction. But with Llama 3, as with Llama 2, Meta has imposed certain licensing restrictions. For example, Llama models can't be used to train other models. And app developers with more than 700 million monthly users must request a special license from Meta.

Debates over the definition of open source aren't new. But as companies in the AI space play fast and loose with the term, they're adding fuel to a long-running philosophical argument.

Last August, a study co-authored by researchers at Carnegie Mellon University, the AI Now Institute, and the Signal Foundation found that many AI models branded "open source," Llama included, come with big catches. The data required to train the models is kept secret. The compute power needed to run them is beyond the reach of many developers. And the labor required to fine-tune them is prohibitively expensive.

So if these models aren't truly open source, what exactly are they? That's a good question; defining open source in terms of AI is not an easy task.

One related open question is whether copyright, the foundational IP mechanism behind open source licensing, can be applied to the various components and pieces of an AI project, in particular a model's inner scaffolding (e.g., embeddings). Then there's a mismatch to overcome between the perception of open source and how AI actually works. Open source was devised in part to ensure that developers could study and modify code without restrictions. With AI, though, which ingredients you need in order to do that studying and modifying is open to interpretation.

Despite all the uncertainty, the Carnegie Mellon study makes clear the harm inherent in tech giants like Meta co-opting the phrase "open source."

Often, "open source" AI projects like Llama end up kicking off news cycles (free marketing) and providing technical and strategic benefits to the projects' maintainers. The open source community rarely sees these same benefits, and when it does, they're marginal compared to what the maintainers gain.

Instead of democratizing AI, “open source” AI projects, especially those from big tech companies, tend to entrench and expand centralized power, the study co-authors said. It's good to keep this in mind the next time a major “open source” model is released.

Here are some other notable AI stories from the past few days.

Meta updates its chatbot: Coinciding with the Llama 3 debut, Meta upgraded its AI chatbot across Facebook, Messenger, Instagram, and WhatsApp (Meta AI) with a Llama 3-powered backend. It also launched new features, including faster image generation and access to web search results.

AI-generated porn: Ivan writes about how the Oversight Board, Meta's semi-independent policy council, is turning its attention to how the company's social platforms are handling explicit, AI-generated images.

Snap watermarks: Social media service Snap plans to add watermarks to AI-generated images on its platform. A translucent version of the Snap logo with a sparkle emoji, the new watermark will be added to any AI-generated image exported from the app or saved to the camera roll.

The new Atlas: Hyundai-owned robotics company Boston Dynamics has unveiled its next-generation humanoid Atlas robot. In contrast to its hydraulics-powered predecessor, the new robot is all-electric and much friendlier in appearance.

Humanoids on humanoids: Not to be outdone by Boston Dynamics, Mobileye founder Amnon Shashua has launched a new startup, Menteebot, focused on building bipedal robotics systems. A demo video shows a Menteebot prototype walking over to a table and picking up fruit.

Reddit, translated: In an interview with Amanda, Reddit CPO Pali Bhat revealed that an AI-powered language translation feature, meant to bring the social network to a more global audience, is in the works, along with an assistive moderation tool trained on Reddit moderators' past decisions and actions.

AI-generated LinkedIn content: LinkedIn has quietly started testing a new way to boost its revenue: a LinkedIn Premium Company Page subscription. For a fee that appears to be as steep as $99 per month, it includes AI to write content and a suite of tools to grow follower counts.

Bellwether: X, the moonshot factory of Google parent Alphabet, this week unveiled Project Bellwether, its latest bid to apply tech to some of the world's biggest problems. Here, that means using AI tools to identify natural disasters like wildfires and floods as quickly as possible.

Protecting kids with AI: Ofcom, the U.K. regulator charged with enforcing the Online Safety Act, plans to launch an exploration into how AI and other automated tools can be used to proactively detect and remove illegal content online, specifically to shield children from harmful content.

OpenAI lands in Japan: OpenAI is expanding to Japan, opening a new Tokyo office and planning a GPT-4 model optimized specifically for the Japanese language.

More machine learning


Can a chatbot change your mind? Swiss researchers found that not only can it, but if it is armed in advance with some personal information about you, it can actually be more persuasive in a debate than a human with that same information.

"This is Cambridge Analytica on steroids," said project lead Robert West of EPFL. The researchers suspect the model (GPT-4 in this case) drew on its vast store of arguments and facts from online discussions to present a more compelling and confident case. But the outcome kind of speaks for itself. Don't underestimate the power of LLMs in matters of persuasion, West warned: "In the context of the upcoming U.S. elections, people are concerned because that's where this kind of technology is always first battle-tested. One thing we know for sure is that people will be using the power of large language models to try to swing the election."

Why are these models so good at language, anyway? That's an area with a long history of research, going back to ELIZA. If you're curious about one of the people who's been there for much of it, check out this profile of Stanford's Christopher Manning, who was just awarded the John von Neumann Medal. Congratulations!

In a provocatively titled interview, another long-time AI researcher, Stuart Russell (who has also appeared on a TechCrunch stage), and postdoctoral researcher Michael Cohen speculate on how to keep AI from killing us all. Probably a good thing to figure out sooner rather than later! It isn't a superficial discussion, though: these are smart people talking about how we might actually understand the motivations (if that's the right word) of AI models, and how regulations ought to be built around them.

The interview is actually about a paper they published in Science earlier this month, in which they propose that advanced AIs capable of acting strategically to achieve their goals (which they call "long-term planning agents") may be impossible to test. Essentially, if a model learns to "understand" the testing it must pass in order to succeed, it may very well learn ways to creatively negate or circumvent that testing. We've seen it at a small scale, so why not a large one?

Russell proposes restricting the hardware needed to build such agents… but of course, Los Alamos National Laboratory and Sandia National Laboratories just got their deliveries. LANL just held the ribbon-cutting ceremony for Venado, a new supercomputer intended for AI research, composed of 2,560 Nvidia Grace Hopper chips.

Researchers are working on new neuromorphic computers.

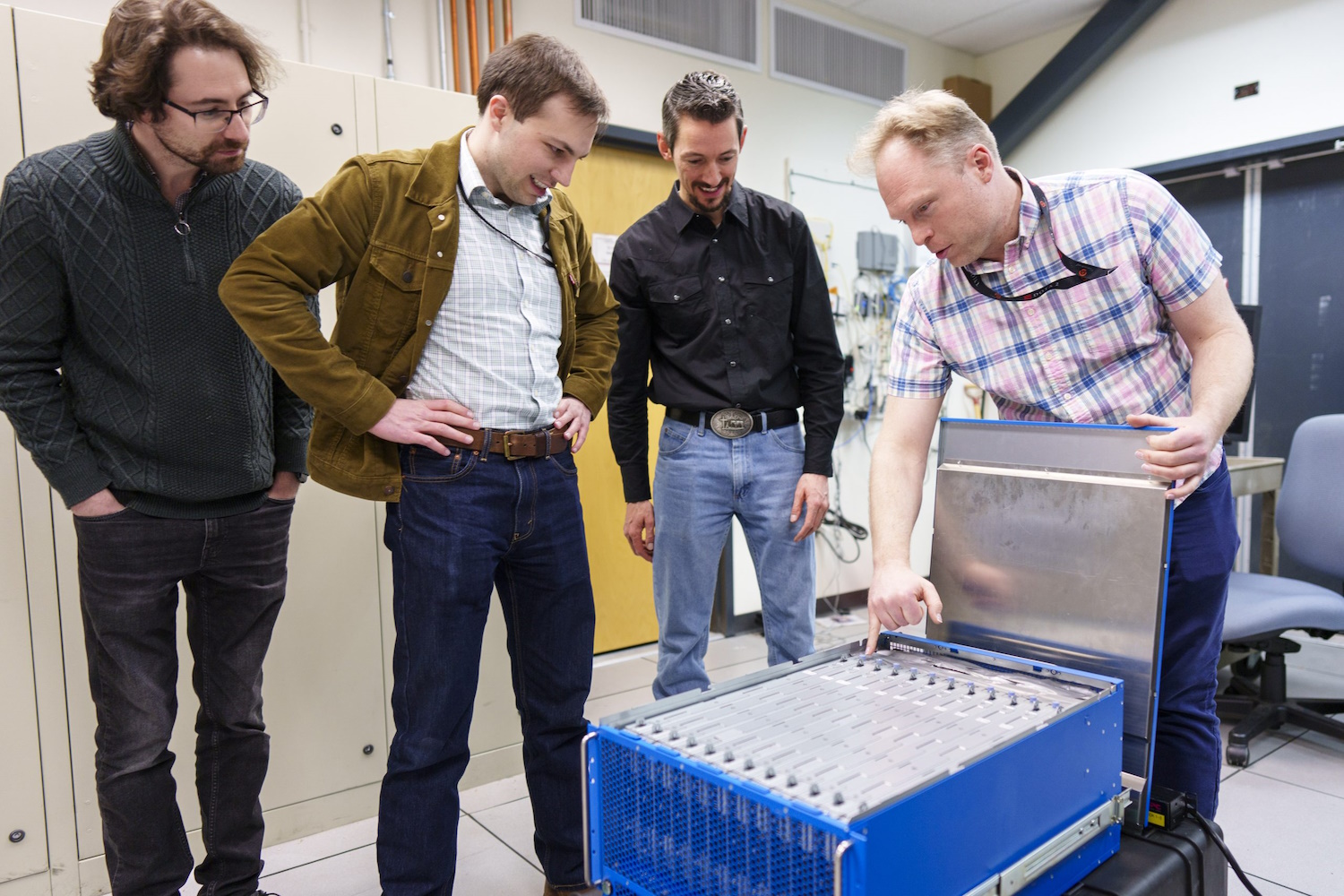

And Sandia just received "an extraordinary brain-based computing system called Hala Point," with 1.15 billion artificial neurons, built by Intel and believed to be the largest such system in the world. Neuromorphic computing, as it's called, isn't intended to replace systems like Venado, but to pursue new methods of computation that are more brain-like than the rather statistics-focused approach we see in modern models.

"With this billion-neuron system, we will have an opportunity to innovate at scale both new AI algorithms that may be more efficient and smarter than existing algorithms, and new brain-like approaches to existing computer algorithms such as optimization and modeling," said Sandia researcher Brad Aimone. Sounds dandy… just dandy!