Last October, a research paper published by Google data scientist Matej Zaharia, CTO of Databricks, and Professor Peter Abbeel of the University of California, Berkeley, proposed a GenAI model, a model along the lines of OpenAI's GPT-4 and ChatGPT. , a method was proposed that could capture more data. Data is now more accessible than ever before. In this study, the co-authors believe that by removing a major memory bottleneck in AI models, the models can process millions of words instead of hundreds of thousands, the maximum number of most capable models at the time. We have proven that it will.

AI research seems to be progressing rapidly.

Today, Google announced the release of Gemini 1.5 Pro, the newest member of the Gemini family of GenAI models. Designed as a drop-in replacement for Gemini 1.0 Pro (previously called “Gemini Pro 1.0” for reasons known only to Google's labyrinthine marketing department), Gemini 1.5 Pro has improved compared to its predecessor. has been improved in many ways, and probably in most ways. The amount of data that can be processed varies greatly.

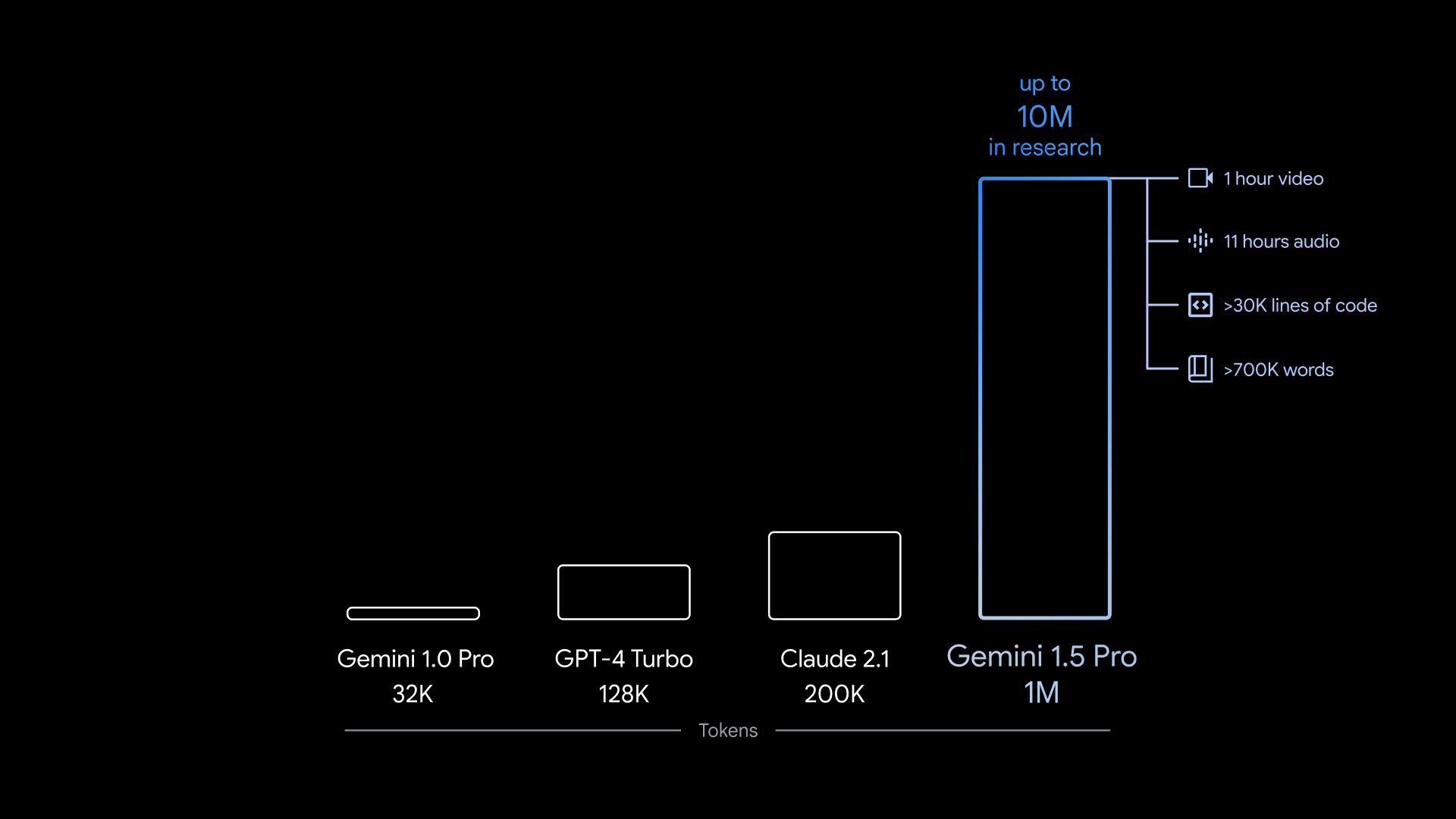

Gemini 1.5 Pro can capture approximately 700,000 words, or approximately 30,000 lines of code. This is 35 times more than what Gemini 1.0 Pro can handle. And the model is multimodal, so it's not limited to text. Gemini 1.5 Pro can capture up to 11 hours of audio or 1 hour of video in various languages.

Image credits: Google

To be clear, this is an upper limit.

The version of Gemini 1.5 Pro, available to most developers and customers starting today (in limited preview), can only process up to 100,000 words at a time. Google has characterized the data-intensive Gemini 1.5 Pro as “experimental,” making it available only to approved developers to try it out via the company's GenAI development tool AI Studio as part of a private preview. There is. Some customers using Google's Vertex AI platform also have access to Gemini 1.5 Pro, which allows for massive data entry, but not all.

Still, Google DeepMind's vice president of research, Oriol Vinyals, hailed it as an achievement.

“When I talk to you, [GenAI] “For a model, the information you're inputting and outputting is the context, and the longer and more complex the questions and interactions, the more context the model needs to be able to handle,” Vinyals said at a press conference. “We unraveled the long context in a fairly large-scale way.”

larger context

A model's context, or context window, refers to the input data (such as text) that the model considers before producing output (such as additional text). A simple question – “Who won the 2020 US presidential election?” – serves as context, as does a movie script, email, or e-book.

Models with small context windows tend to “forget” even the most recent conversations, often going off topic and in problematic ways. This is not necessarily true for models with large contexts. As an added benefit, large-scale context models can better capture the narrative flow of the data they ingest and, at least hypothetically, generate more context-rich responses.

Other attempts and experiments have been made with models that have unusually large context windows.

Last summer, AI startup Magic claimed to have developed a large-scale language model (LLM) with a 5 million-token context window. His two papers published in the past year detail his architecture, ostensibly a model that can scale to 1 million tokens, and beyond. (A “token” is a subdivision of raw data, like the syllables “fan,” “tas,” and “tic” in the word “fantastic.”) And recently, Meta, Massachusetts Institute of Technology, A group of scientists from Carnegie Mellon University says this technique completely removes the model context window size constraint.

However, Google is the first to make a model with a context window of this size commercially available, surpassing previous leader Anthropic's 200,000-token context window if the private preview is considered commercially available. I did.

Image credits: Google

Gemini 1.5 Pro has a maximum context window of 1 million tokens, and the more widely available version of the model has the same 128,000 token context window as OpenAI's GPT-4 Turbo.

So what can you accomplish with a million-token context window? Google can analyze entire code libraries, “reason” long documents such as contracts, have long conversations with chatbots, and analyze the content of videos. It promises a lot of things, including comparisons.

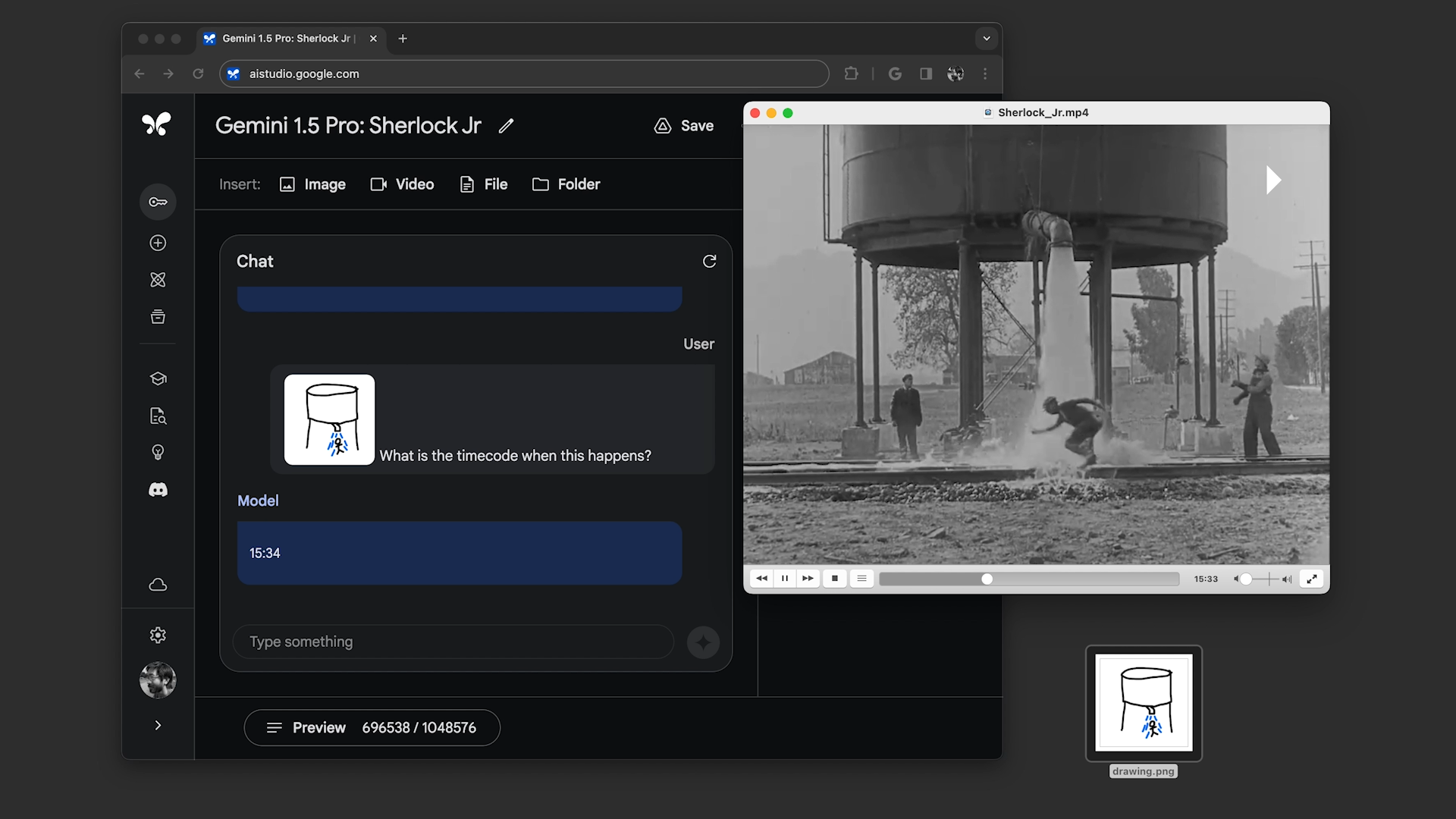

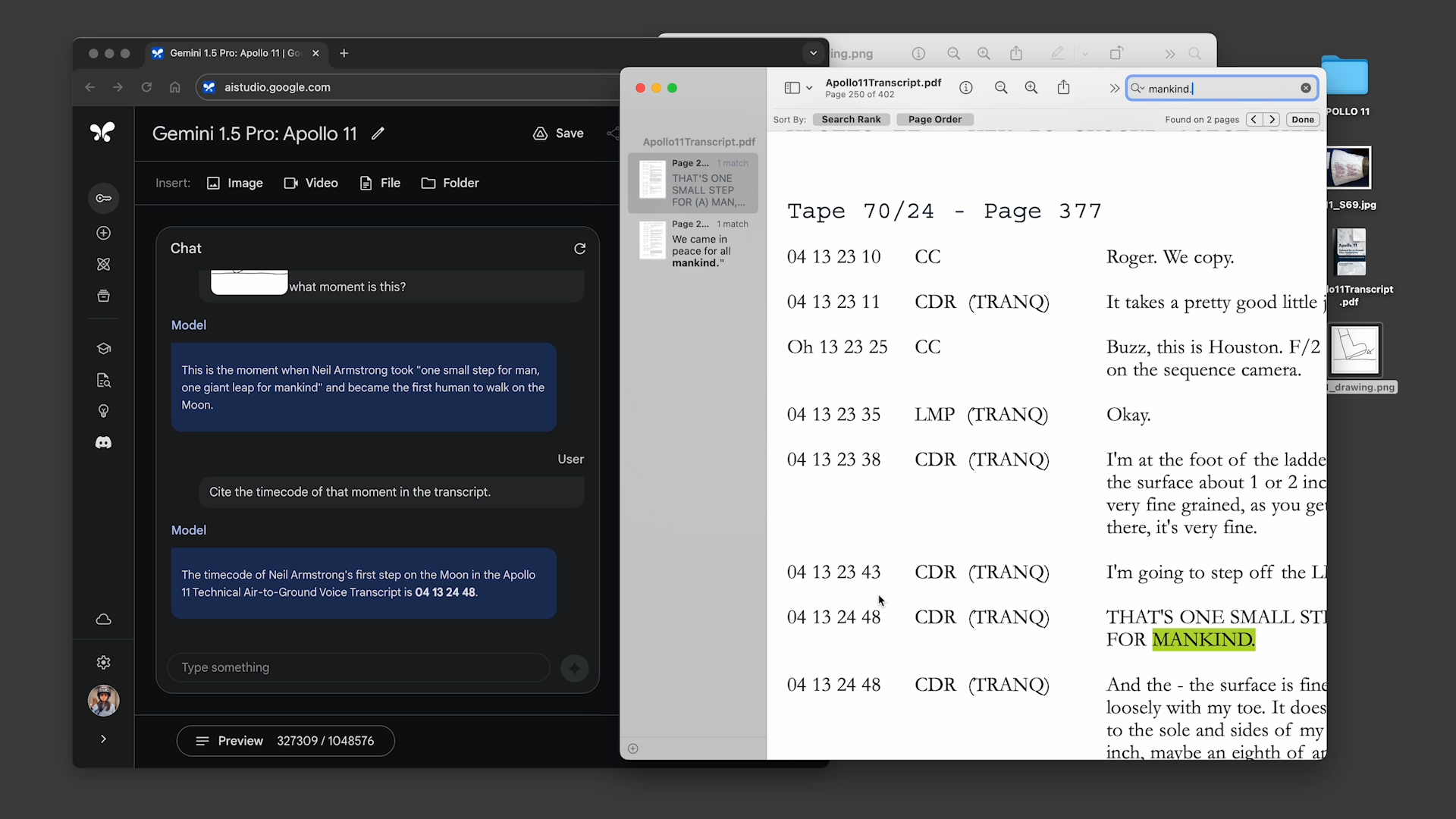

During the briefing, Google showed off two pre-recorded demos of Gemini 1.5 Pro with a 1 million token context window enabled.

In the first experiment, the demonstrator asked Gemini 1.5 Pro to search the transcript of the Apollo 11 moon landing television broadcast (approximately 402 pages) for quotes that contained jokes, and then searched the transcript of the Apollo 11 moon landing television broadcast (approximately 402 pages) for quotes that contained jokes within the television broadcast, resembling pencil sketches. I asked them to find a scene. . In the second, the demonstrator asked the model to search for a scene from the Buster Keaton movie “Sherlock Jr.” based on the description and another sketch.

Image credits: Google

The Gemini 1.5 Pro successfully completed all the tasks I asked of it, but it wasn't all that fast. Each process took him about 20 seconds to 1 minute. This was much longer than the average ChatGPT query.

Image credits: Google

Vinyals says that latency will improve once the model is optimized. The company is already testing a version of Gemini 1.5 Pro. 10 million tokens Context window.

“Latency aspects [is something] We are … working on optimization, but this is still in the experimental, research phase,” he said. “So we can say these issues exist just like any other model.”

I'm not sure the delay is appealing to that many people. Especially for paying customers. Having to wait several minutes at a time to search the entire video is not pleasant. It also doesn't seem to be very scalable in the short term. And I'm worried about how the latency will show up in other applications, like chatbot conversations or codebase analysis. Viñals didn't say anything, but that doesn't inspire much confidence.

As my more optimistic colleague Frédéric Lardinova pointed out: whole The time savings alone might be worth the twiddling of your thumbs. But I think it depends a lot on the use case. Is it to identify plot points in the show? Probably not. But how can you find a suitable screenshot from a movie scene that you only vaguely remember? Maybe.

Other improvements

Gemini 1.5 Pro brings other quality of life upgrades beyond the expanded context window.

Google says Gemini 1.5 Pro will be “comparable” in terms of quality to the current version of Google's flagship GenAI model, Gemini Ultra, thanks to a new architecture consisting of a smaller, specialized “Expert” model. claims. Gemini 1.5 Pro essentially splits tasks into multiple subtasks, delegates them to the appropriate expert models, and decides which tasks to delegate based on its own predictions.

MoE is not new and has been around in some form for many years. However, its efficiency and flexibility have made it popular among model vendors (see: Model Powering Microsoft's Language Translation Services).

Now, the expression “equivalent quality” is a bit ambiguous. Quality when it comes to GenAI models, especially multimodal models, is difficult to quantify, and that quality is even higher when the models are gated behind private previews that exclude the press. Unsurprisingly, Google claims that in the benchmarks it uses, the Gemini 1.5 Pro performs at a “nearly similar level” compared to the Ultra. While developing the LLM Outperforms Gemini 1.0 Pro in 87% of them benchmark. (Note that performance above Gemini 1.0 Pro is a low bar. )

Pricing is a big question mark.

Gemini 1.5 Pro with a 1 million token context window is free to use during a private preview period, Google said.However, the company plans to introduce Pricing tiers in the near future will start at the standard 128,000 context window and extend up to 1 million tokens.

You have to imagine that expanding the context window won't come cheap, but Google's choice not to reveal the price at the briefing didn't allay fears. If the pricing matches Anthropic's price, it could cost $8 per million prompt tokens and $24 per million generated tokens. But perhaps it will be even lower. Something strange happened! We'll have to wait and see.

I'm also curious to see how it affects the rest of the Gemini family, primarily the Gemini Ultra. Can we expect the upgrades for the Ultra model to be roughly the same as the upgrades for the Pro? Or, as is the case today, will the available Pro model be more expensive than the Ultra model, which Google still touts as the top of the Gemini portfolio? Will there always be those pesky periods where the performance is better?

If you're feeling charitable, chalk it up to teething problems. If not, just call it what it is. “I'm so confused.”