The European Parliament voted Wednesday to adopt the AI Act, putting the European Union in pole position to set rules for a wide range of software that uses artificial intelligence, with what the region's lawmakers are calling “the world's first comprehensive AI law.”

MEPs overwhelmingly backed the provisional agreement reached in December in trilogue talks with the Council, with 523 votes in favor, 46 against and 49 abstentions.

This landmark law establishes a risk-based framework for AI, applying different rules and requirements depending on the level of risk attached to the use case.

Today's full parliamentary vote follows affirmative committee votes and the provisional agreement winning the backing of all 27 ambassadors of EU member states last month. The outcome of the plenary means the AI Act is on track to become law across the region soon, with only final approval from the Council remaining.

🇪🇺Democracy: 1️⃣ | Lobby: 0️⃣

We welcome the overwhelming support from the European Parliament for our #AIAct, the world's first comprehensive and binding rules for trustworthy AI.

Europe is now a global standard setter in AI.

We regulate as little as possible, but as much as necessary. pic.twitter.com/t4ahAwkaSn

— Thierry Breton (@ThierryBreton) March 13, 2024

The AI Act will be published in the EU's Official Journal in the coming months and will enter into force 20 days later. Implementation is phased: the first subset of provisions (prohibited use cases) takes effect after six months, with others applying after 12, 24 and 36 months. Full implementation is therefore not expected until mid-2027.
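The phased timeline is simple date arithmetic. As a rough sketch, the Python below derives the milestones from a publication date. The date used here is purely hypothetical (the Official Journal date is not yet known), and `add_months` and `rollout` are illustrative helper names, not anything defined by the law.

```python
import calendar
from datetime import date, timedelta


def add_months(d: date, months: int) -> date:
    """Shift a date forward by whole calendar months, clamping the day if needed."""
    index = d.year * 12 + (d.month - 1) + months
    year, month_zero_based = divmod(index, 12)
    month = month_zero_based + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(d.day, last_day))


def rollout(publication: date) -> dict:
    """Entry into force 20 days after publication, then phases at 6/12/24/36 months."""
    in_force = publication + timedelta(days=20)
    phases = {f"+{m} months": add_months(in_force, m) for m in (6, 12, 24, 36)}
    return {"in force": in_force, **phases}


# Hypothetical publication date, chosen only to illustrate the arithmetic.
for label, when in rollout(date(2024, 6, 1)).items():
    print(f"{label:>10}: {when.isoformat()}")
```

With a publication date around mid-2024, the last phase lands around mid-2027, which matches the timeline described above.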

On the enforcement side, breaching the ban on prohibited uses of AI can attract fines of up to 7% of global annual turnover (or 35 million euros, whichever is higher). Violations of other provisions on AI systems could result in fines of up to 3% (or 15 million euros). Failing to cooperate with regulators risks a fine of up to 1%.
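To make the penalty ceilings concrete, here is a minimal Python sketch of the tiers as this article describes them. It treats the fixed euro amounts as floors that apply whichever figure is higher; that reading is an assumption for the 3% tier. The article gives no fixed amount for the 1% tier, so none is modeled. `FINE_TIERS` and `max_fine` are hypothetical names used for illustration only.

```python
# Tiers as described in the article:
# (percent of global annual turnover, fixed amount in euros).
# Assumption: the fixed amount is a floor ("whichever is higher").
FINE_TIERS = {
    "prohibited_use": (7, 35_000_000),
    "other_violation": (3, 15_000_000),
    "non_cooperation": (1, 0),  # no fixed amount is given in the article
}


def max_fine(tier: str, global_turnover_eur: int) -> int:
    """Upper bound on the fine, in whole euros, for a given tier and turnover."""
    percent, fixed_amount = FINE_TIERS[tier]
    return max(global_turnover_eur * percent // 100, fixed_amount)


# A company with 1 billion euros in turnover: 7% (70 million) exceeds the floor.
print(max_fine("prohibited_use", 1_000_000_000))  # 70000000
# A company with 100 million euros in turnover: the 35 million floor applies.
print(max_fine("prohibited_use", 100_000_000))    # 35000000
```

These are ceilings, not the fines a regulator would actually impose in a given case.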

In a debate on Tuesday ahead of the plenary vote, Dragoș Tudorache, an MEP and co-rapporteur on the AI Act, said: “And with that alone, the AI Act has pushed the future of AI in a human-centric direction, a direction in which humans control the technology and the technology helps us harness new discoveries, economic growth and social progress, and unlock human potential.”

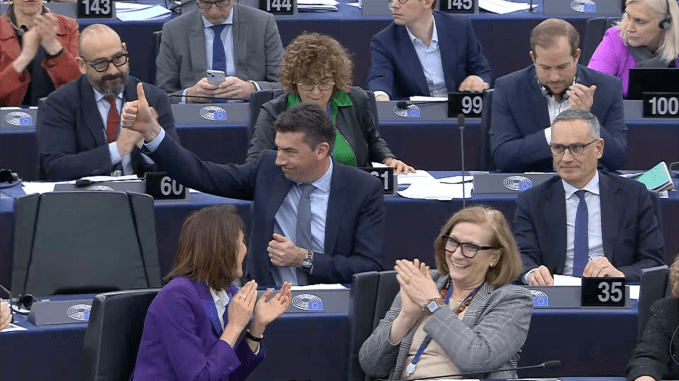

Dragoș Tudorache, co-rapporteur on the AI Act, speaking in support of the law ahead of the European Parliament plenary vote (Screen capture: Natasha Lomas/TechCrunch)

The risk-based proposal was first presented by the European Commission in April 2021. It was then substantially amended and expanded by the EU's co-legislators in the Parliament and the Council over a multi-year negotiation process, culminating in a political agreement after marathon final talks in December.

The law deems some potential AI use cases an “unacceptable risk” and bans them outright (such as social scoring and subliminal manipulation). It also defines a range of “high-risk” applications, such as AI used in education, employment and remote biometric identification. These systems must be registered, and their developers must comply with the risk and quality management provisions set out in the law.

Under the EU's risk-based approach, most AI apps are considered low-risk and fall outside the law's scope, so no strict rules apply to them. But the law also imposes some (light-touch) transparency obligations on a third subset of apps, including AI chatbots; generative AI tools that can create synthetic media (aka deepfakes); and general-purpose AI models (GPAIs). The most powerful GPAIs face additional rules if they are classified as posing so-called “systemic risk,” which triggers risk management obligations.

The GPAI rules were a late addition to the AI Act, driven by concerned MEPs. Last year they proposed a tiered system of requirements aimed at ensuring that the wave of advanced models behind the recent boom in generative AI tools would not escape regulation.

However, a handful of EU member states, led by France, pushed in the opposite direction, fueled by lobbying from homegrown AI startups (such as Mistral). They pressed for a regulatory carve-out for AI model makers, arguing that Europe should focus on scaling up national champions in the fast-developing field to keep up in the global AI race.

In the face of intense lobbying, the political compromise lawmakers reached in December watered down MEPs' original proposal for regulating GPAIs.

Although a complete carve-out from the law was not granted, most of these models will face only limited transparency requirements. Only GPAIs whose training used more than 10^25 FLOPs of compute must carry out risk assessment and mitigation on their models.
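For a sense of scale, the sketch below checks a model against the 10^25 FLOPs threshold. The training-compute estimate uses the common rule of thumb of roughly 6 × parameters × training tokens for dense transformer models. That heuristic comes from the machine-learning scaling literature, not from the AI Act, and the function names and example model sizes are hypothetical.

```python
SYSTEMIC_RISK_FLOPS = 10**25  # threshold cited in the article


def estimate_training_flops(params: int, tokens: int) -> int:
    """Rough estimate: ~6 FLOPs per parameter per training token (a heuristic,
    not the law's method of measurement)."""
    return 6 * params * tokens


def needs_risk_assessment(training_flops: int) -> bool:
    """True only when training compute is strictly more than the threshold."""
    return training_flops > SYSTEMIC_RISK_FLOPS


# Hypothetical model: 70 billion parameters trained on 2 trillion tokens.
flops = estimate_training_flops(70 * 10**9, 2 * 10**12)
print(f"{flops:.1e}", needs_risk_assessment(flops))  # 8.4e+23 False
```

Under this estimate, a model of that size sits more than an order of magnitude below the threshold, which illustrates why most models would face only the lighter transparency requirements.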

Since the compromise agreement, it has also been revealed that Mistral has received investment from Microsoft. The US tech giant owns a much larger stake in OpenAI, the US-based ChatGPT maker.

At a press conference ahead of today's plenary vote, the co-rapporteurs were asked about Mistral's lobbying efforts and whether the startup had succeeded in weakening the EU's rules on GPAIs. “I think we can agree that the results speak for themselves,” Brando Benifei replied. “The law clearly defines the safety needs of the most powerful models with clear standards…I think we have achieved a clear framework that ensures transparency and safety requirements for the most powerful models.”

Tudorache also rejected suggestions that lobbyists had a negative impact on the final shape of the law. “We negotiated and made compromises that we thought were reasonable,” he said, calling the result a “necessary” balance. “What companies do and choose to do are their own decisions, and they have not had any impact on our work.”

“For everyone developing these models, there was an interest in maintaining a ‘black box’ in terms of the data that goes into these algorithms,” he added. “On the other hand, we promoted the idea of transparency, especially with respect to copyrighted material, because we thought that was the only way to give effect to the rights of authors out there.”

Benifei also pointed to the addition of environmental reporting obligations to the AI Act as a further victory.

The MEPs added that the AI Act marks the start of the EU's journey on AI governance, stressing that the model will need to evolve and be extended by further legislation in the future. Benifei pointed to the need for a directive setting rules for the use of AI in the workplace.

Efforts are also needed to strengthen conditions for investing in AI, he said. “We want Europe to invest in artificial intelligence. To do more common research. To further strengthen the sharing of computing power. The work on supercomputers… and the need to complete the capital markets union, because we need to be able to invest in AI with more [ease] than today. There is currently a risk that some investors prefer to invest in the United States rather than in another European country or another European company.”

“This law is just the beginning of a longer journey, because AI will have an impact that cannot be measured through this AI Act alone. It will have an impact on our education systems, on our labor market and on warfare,” Tudorache added. “So there's a whole new world opening up where AI will play a central role, and so from this point on, as we also build the governance that flows from this law, we will have to pay close attention to the evolution of the technology, and be prepared to respond to new challenges that may arise from it.”

Tudorache also reiterated the call he made last year for collaboration on AI governance among like-minded governments, and more broadly wherever agreement can be reached.

“We still have an obligation to try to be as interoperable as possible, which means being open to building governance with as many democracies and as many like-minded partners as possible, because the technology is one and the same no matter what quarter of the world you are in. So we need to invest in integrating this governance into a meaningful framework.”