Firefly, Adobe's family of generative AI models, doesn't have a great reputation among creators.

Firefly's image generation model has been ridiculed as underwhelming and flawed, especially when compared to Midjourney, OpenAI's DALL-E 3 and other rivals, with a tendency to distort limbs and landscapes and to miss the nuances of prompts. But Adobe is looking to turn things around this week with its third-generation model, Firefly Image 3, which is being released during the company's Max London conference.

The model, now available in Photoshop (beta) and Adobe's Firefly web app, produces more "realistic" imagery than its predecessor (Image 2) and its predecessor's predecessor (Image 1), thanks to its ability to understand longer, more complex prompts and scenes as well as improved lighting and text generation capabilities. It should more accurately render things like typography, iconography, raster images and line art, says Adobe, and is "remarkably" adept at depicting dense crowds and people with "detailed features" and "various moods and expressions."

For what it's worth, in my brief, unscientific testing, Image 3 does appear to be a step up from Image 2.

I wasn't able to try Image 3 myself. However, Adobe PR sent me a few outputs and prompts from the model, and I ran those same prompts through Image 2 on the web to get samples to compare against the Image 3 outputs. (Keep in mind that the Image 3 outputs may have been cherry-picked.)

Notice the lighting in this headshot from Image 3 compared to the one below it, from Image 2:

From Image 3. Prompt: "Studio Portrait of a Young Woman."

Same prompt as above, from Image 2.

To my eye, the Image 3 output looks more detailed and lifelike, with shadowing and contrast that are largely absent from the Image 2 sample.

Here is a set of images showing Image 3's scene understanding at work:

From Image 3. Prompt: "An artist sits at his desk in his studio, lost in thought as he looks at his vast paintings and fantastical objects."

Same prompt as above, from Image 2.

Note that the Image 2 sample is fairly basic compared to the Image 3 output in terms of level of detail and overall expressiveness. Something is off with the subject's shirt in the Image 3 sample (around the waist), but the pose is more complex than that of the subject in Image 2 (whose clothes are also a little off).

Some of Image 3's improvements can no doubt be traced to its larger and more diverse training data set.

Like Image 2 and Image 1, Image 3 is trained on uploads to Adobe Stock, Adobe's royalty-free media library, along with licensed content and public domain content whose copyright has expired. Adobe Stock is constantly growing, and as a result, so is the available training data set.

In an effort to avoid lawsuits and position itself as a more "ethical" alternative to generative AI vendors that train on images indiscriminately (e.g., OpenAI and Midjourney), Adobe has a program to pay Adobe Stock contributors whose work ends up in the training data set. (Note, however, that the program's terms are fairly opaque.) Controversially, Adobe also trains Firefly models on AI-generated images, which some consider a form of data laundering.

A recent Bloomberg report revealed that AI-generated images in Adobe Stock are not excluded from the training data for Firefly's image generation models, an alarming prospect given that those images may contain regurgitated copyrighted material. Adobe has defended the practice, saying that AI-generated images make up only a small portion of the training data and go through a moderation process to ensure they don't depict trademarks or recognizable characters or reference artists' names.

Of course, neither diverse, more "ethically" sourced training data nor content filters and other safeguards guarantee a completely flawless experience; see users generating images of people flipping the bird with Image 2. The real test of Image 3 will come once the community gets its hands on it.

New AI-powered features

Image 3 powers several new features in Photoshop beyond enhanced text-to-image generation.

Image 3's new "Style Engine," along with a new auto-stylization toggle, allows the model to generate a wider range of colors, backgrounds and subject poses. These feed into Reference Image, an option that lets users condition the model on an image whose colors and tones they want future generated content to match.

Three new generative tools (Generate Background, Generate Similar and Enhance Detail) leverage Image 3 to make precise edits to images. The self-descriptive Generate Background replaces a background with a generated one that blends into the existing image, while Generate Similar offers variations on a selected portion of a photo (a person or an object, for example). As for Enhance Detail, it "tweaks" images to improve sharpness and clarity.

If these features sound familiar, that's because they've been in beta in the Firefly web app for at least a month (and in Midjourney for much longer). This marks their debut in Photoshop, albeit in beta.

As for the web app, Adobe isn't neglecting this alternative route to its AI tools.

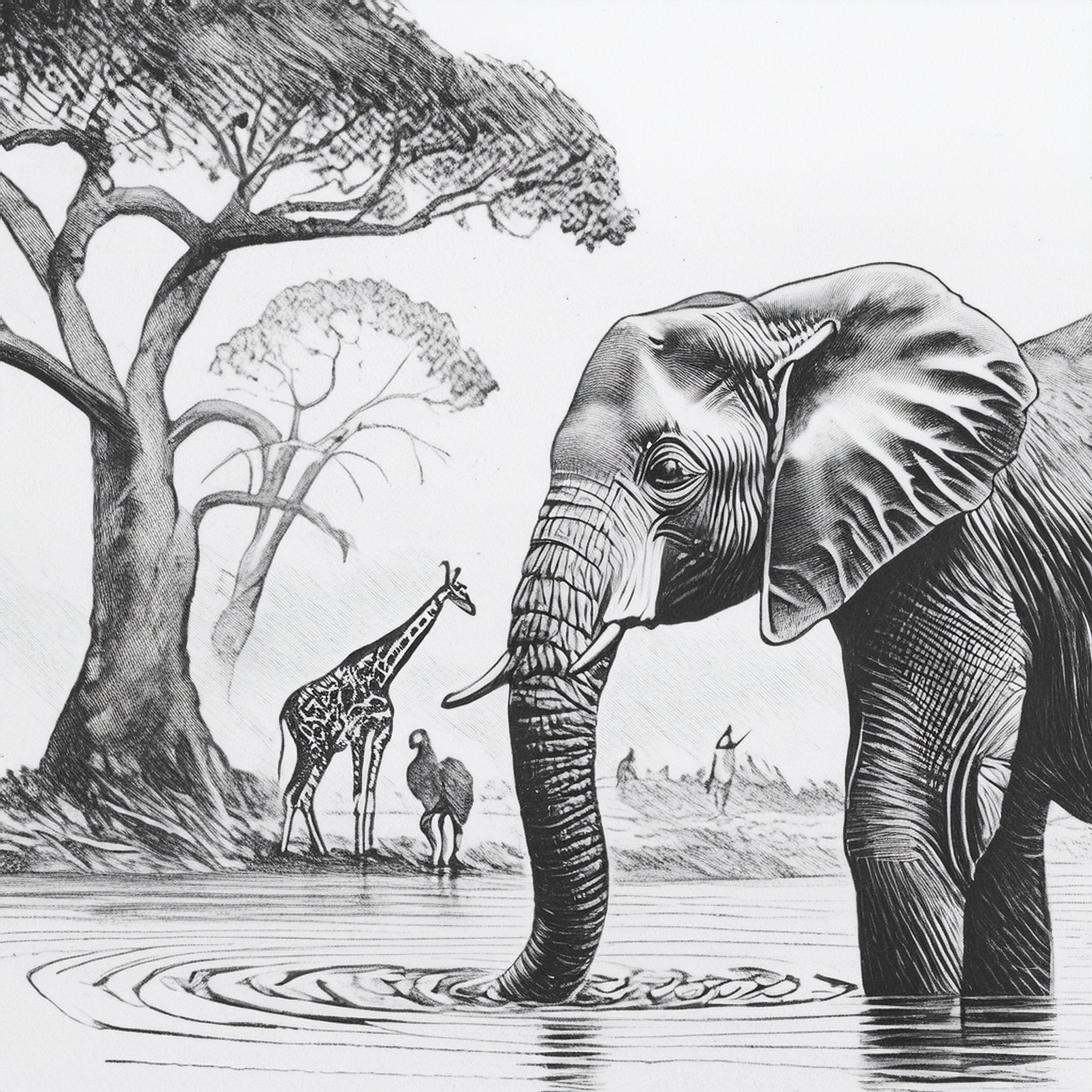

With the release of Image 3, the Firefly web app gets Structure Reference and Style Reference, which Adobe is touting as new ways to "advance creative control." (Both were announced in March but are now becoming widely available.) Structure Reference lets users generate new images that match the "structure" of a reference image (for example, a head-on view of a race car). Style Reference is essentially style transfer by another name: it preserves the content of an image (such as an elephant on an African safari) while mimicking the style of a target image (such as a pencil sketch).

Here's Structure Reference in action:

Original image.

Transformed with Structure Reference.

And here's Style Reference:

Original image.

Transformed with Style Reference.

I asked Adobe whether Firefly image generation pricing would change with all these upgrades. Currently, the cheapest Firefly Premium plan costs $4.99 per month, undercutting competitors like Midjourney ($10 per month) and OpenAI (which gates DALL-E 3 behind a $20-per-month ChatGPT Plus subscription).

Adobe said its current tiers and generative credit system will remain in place for the time being. It also said that its indemnification policy, under which Adobe pays copyright claims related to works generated with Firefly, remains unchanged, as does its approach to watermarking AI-generated content. Content Credentials (metadata that identifies AI-generated media) will continue to be automatically attached to all Firefly image generations on the web and in Photoshop, whether an image was generated from scratch or partially edited using generative features.