Anthropic, an AI startup backed by hundreds of millions in venture capital (and likely hundreds of millions more soon), today announced Claude 3, the latest version of its GenAI technology. And the company claims it rivals OpenAI's GPT-4 in terms of performance.

Claude 3, as Anthropic's new GenAI is called, is a family of models: Claude 3 Haiku, Claude 3 Sonnet, and Claude 3 Opus, with Opus being the most powerful. Anthropic claims that all offer “improved capabilities” in analysis and forecasting, as well as improved performance on certain benchmarks compared to models including GPT-4 (but not GPT-4 Turbo) and Google's Gemini 1.0 Ultra (but not Gemini 1.5 Pro).

Notably, Claude 3 is Anthropic's first multimodal GenAI, meaning it can analyze text as well as images, similar to GPT-4 and some flavors of Gemini. Claude 3 can process photos, charts, graphs, and technical diagrams, drawing from PDFs, slideshows, and other types of documents.

In a step up over some GenAI rivals, Claude 3 can analyze multiple images (up to 20) in a single request. This allows it to compare and contrast images, Anthropic says.
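In practice, the 20-image ceiling means a larger set of images has to be split across several requests. The snippet below is a minimal sketch of that batching step only. The limit of 20 comes from the figure quoted above; `chunk_images` and the file names are hypothetical and are not part of any Anthropic SDK.

```python
from typing import Iterator, Sequence, TypeVar

T = TypeVar("T")

# Per-request image limit as quoted in the article (assumption: it applies
# uniformly to every request).
MAX_IMAGES_PER_REQUEST = 20


def chunk_images(
    images: Sequence[T], limit: int = MAX_IMAGES_PER_REQUEST
) -> Iterator[Sequence[T]]:
    """Yield consecutive slices of `images`, each holding at most `limit` items."""
    if limit < 1:
        raise ValueError("limit must be at least 1")
    for start in range(0, len(images), limit):
        yield images[start:start + limit]


# Hypothetical example: 45 scanned pages split into request-sized batches.
pages = [f"page-{i}.png" for i in range(45)]
batches = list(chunk_images(pages))
batch_sizes = [len(batch) for batch in batches]
```

Images that end up in different batches are sent in different requests, so any side-by-side comparison is limited to images within the same batch.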

However, Claude 3's image processing has its limits.

Anthropic has made the models unable to identify people, no doubt wary of the ethical and legal implications. And the company admits that Claude 3 is prone to making mistakes with “low-quality” images (less than 200 pixels) and struggles with tasks that involve spatial reasoning (such as reading an analog clock face) and object counting (that is, tasks that depend on the number of objects in an image).

Image credit: Anthropic

Claude 3 also won't generate artwork. At least for now, the models are strictly for image analysis.

Anthropic says customers can generally expect Claude 3 to follow multi-step instructions better than previous versions, produce structured output in formats such as JSON, and converse more easily in languages other than English. Anthropic says Claude 3 should also refuse to answer questions less often, thanks to a “more nuanced understanding of requests.” And soon, Claude 3 will be able to cite the sources of its answers so that users can verify them.

“Claude 3 tends to produce more expressive and engaging responses,” Anthropic writes in a support article. “[It’s] easier to prompt and steer compared to traditional models. Users will find that they can achieve the desired results by using shorter, more concise prompts.”

Some of these improvements come from Claude 3's expanded context.

A model's context, or context window, refers to the input data (such as text) that the model considers before producing output. Models with small context windows tend to “forget” the content of even very recent conversations, leading them to veer off topic, often in problematic ways. As an added benefit, large-context models can better grasp the narrative flow of the data they ingest and generate more contextually rich responses (at least hypothetically).

According to Anthropic, Claude 3 will initially support a 200,000-token context window, equivalent to approximately 150,000 words, with select customers getting a 1-million-token context window (approximately 700,000 words). This is on par with Google's latest GenAI model, the aforementioned Gemini 1.5 Pro, which also offers a context window of up to 1 million tokens.

Just because Claude 3 is an upgrade over its predecessor doesn't mean it's perfect.

In its technical white paper, Anthropic acknowledges that Claude 3 is not immune to the problems that plague other GenAI models: bias and hallucinations (i.e., making things up). Unlike some GenAI models, Claude 3 cannot search the web. The models can only answer questions using data from before August 2023. Also, while Claude is multilingual, it is not as fluent in certain “low-resource” languages as it is in English.

However, Anthropic is promising frequent updates to Claude 3 in the coming months.

“We don't think we are nearing the limits of model intelligence, and we plan to release [enhancements] to the Claude 3 model family in the coming months,” the company wrote in a blog post.

Opus and Sonnet are currently available on the web and via Anthropic's development console and API, Amazon's Bedrock platform, and Google's Vertex AI. Haiku will follow later this year.

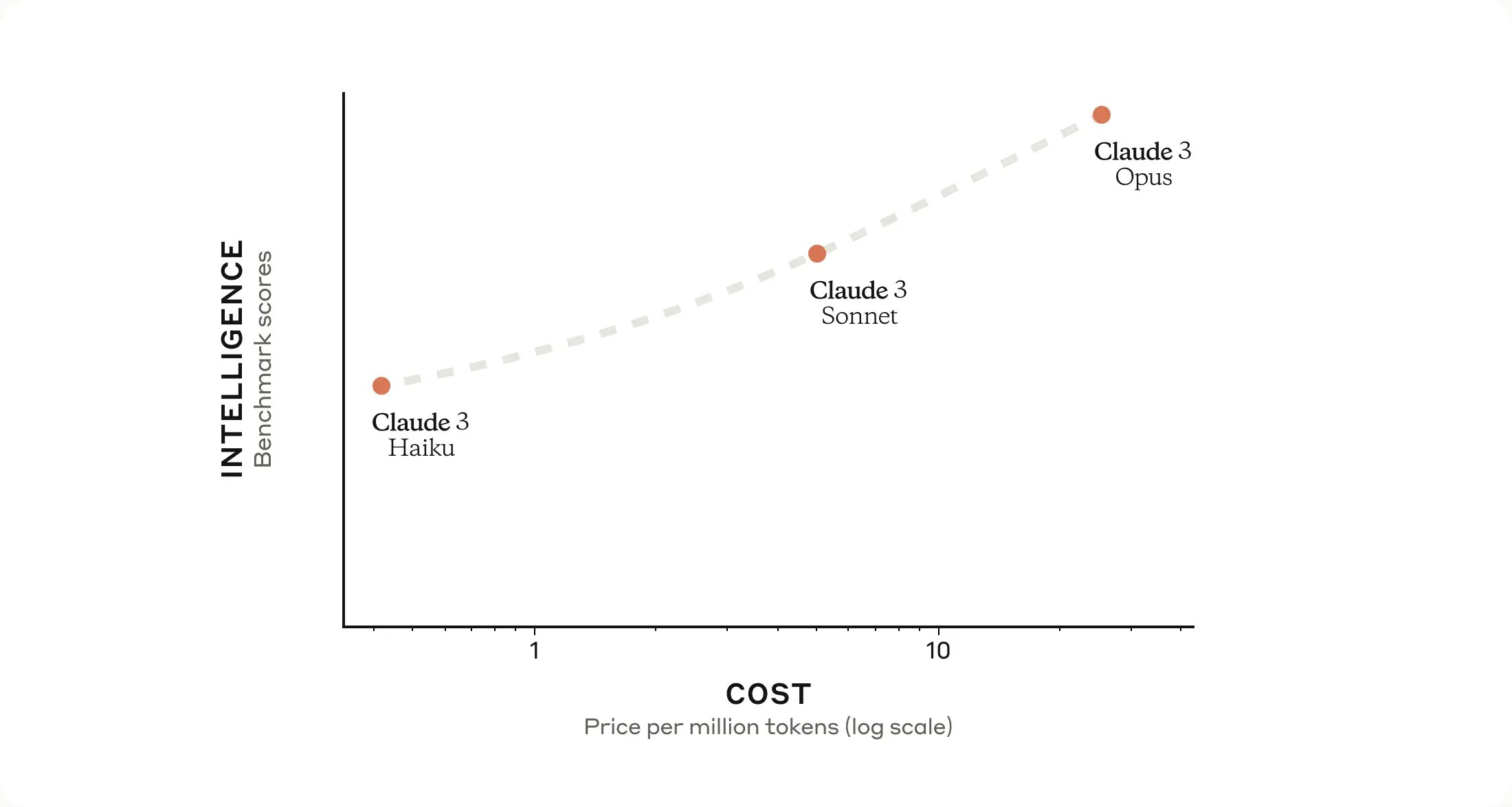

The breakdown of charges is as follows:

- Opus: $15 per million input tokens, $75 per million output tokens
- Sonnet: $3 per million input tokens, $15 per million output tokens
- Haiku: $0.25 per million input tokens, $1.25 per million output tokens
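To make the per-million-token pricing concrete, here is a minimal sketch that computes the cost of a single request from the prices listed above. The prices are the ones quoted in this article. The `request_cost` helper and the lowercase model keys are hypothetical and exist only for illustration.

```python
from decimal import Decimal

# (input price, output price) in US dollars per million tokens, as quoted above.
PRICES_PER_MILLION = {
    "opus": (Decimal("15"), Decimal("75")),
    "sonnet": (Decimal("3"), Decimal("15")),
    "haiku": (Decimal("0.25"), Decimal("1.25")),
}

TOKENS_PER_MILLION = Decimal(1_000_000)


def request_cost(model: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Dollar cost of one request, billing input and output tokens separately."""
    input_price, output_price = PRICES_PER_MILLION[model]
    total = input_price * input_tokens + output_price * output_tokens
    return total / TOKENS_PER_MILLION


# Filling a 200,000-token context window and getting a 1,000-token reply.
opus_cost = request_cost("opus", 200_000, 1_000)    # 3.075 dollars
haiku_cost = request_cost("haiku", 200_000, 1_000)  # 0.05125 dollars
```

`Decimal` is used instead of floats so that fractional-cent amounts stay exact. At these prices the same request is 60 times cheaper on Haiku than on Opus.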

That's Claude 3. But what’s the view at 30,000 feet?

As we previously reported, Anthropic's ambition is to create “the next generation of algorithms for AI self-learning.” Such algorithms could be used to build virtual assistants that can reply to emails, perform research, and generate art, books, and more, some of which we have already gotten a taste of with GPT-4 and other large language models.

Anthropic teased this in the aforementioned blog post, saying that it plans to add features to Claude 3 that enhance its out-of-the-box capabilities, including the ability to interact with other systems, interactive coding, and “more advanced agent features.”

That last part is reminiscent of OpenAI's ambition to build a form of software agent that automates complex tasks, such as transferring data from documents to spreadsheets for analysis or automatically filling out expense reports and entering them into accounting software. OpenAI already offers an API that allows developers to build “agent-like experiences” into their apps, and Anthropic seems keen to provide similar functionality.

Will we see an Anthropic image generator next? Frankly, that would surprise me. Image generators have been the subject of a lot of controversy lately, mainly for reasons related to copyright and bias. Google was recently forced to disable its image generator after it injected diversity into pictures with a farcical disregard for historical context, and a number of image generator vendors are engaged in legal battles with artists who accuse them of profiting from their work by training GenAI on it without providing any credit or compensation.

I'm interested in the evolution of “constitutional AI,” Anthropic's technique for training GenAI. The company claims it makes the models' behavior easier to understand and to adjust if necessary. Constitutional AI aims to provide a way to align AI with human intent, with models responding to questions and performing tasks using a simple set of guiding principles. For Claude 3, for example, Anthropic said that, based on customer feedback, it added constitutional principles that direct the models to be understanding of and accessible to people with disabilities.

Whatever Anthropic's end goal is, the company is in it for the long haul. According to pitch materials leaked last May, the company is aiming to raise up to $5 billion over the next year or so, which may be just the baseline it needs to remain competitive with OpenAI. (Training models isn't cheap, after all.) With $2 billion and $4 billion in capital pledged from Google and Amazon, respectively, the plan is well underway.