A lot can go wrong with GenAI, especially third-party GenAI. It makes things up. It can be biased and harmful. And it may violate copyright rules. According to a recent study by MIT Sloan Management Review and Boston Consulting Group, third-party AI tools are responsible for more than 55% of AI-related failures in organizations.

So it's not entirely surprising that some companies are still cautious about adopting this technology.

But what if GenAI came with a guarantee?

It's a business idea that entrepreneur and electrical engineer Kartik Ramakrishnan came up with a few years ago while working as a senior manager at Deloitte. He co-founded two “AI-first” companies, Gallop Labs and Blu Trumpet, but ultimately realized that trust, and the ability to quantify risk, were holding back AI adoption.

“Nearly every company today is looking for ways to implement AI to increase efficiency and keep up with the market,” Ramakrishnan told TechCrunch in an email interview. “To do this, many companies rely on third-party vendors and deploy their AI models without fully understanding the quality of those products. And because AI is advancing at such a fast pace, the risks and harms are constantly evolving.”

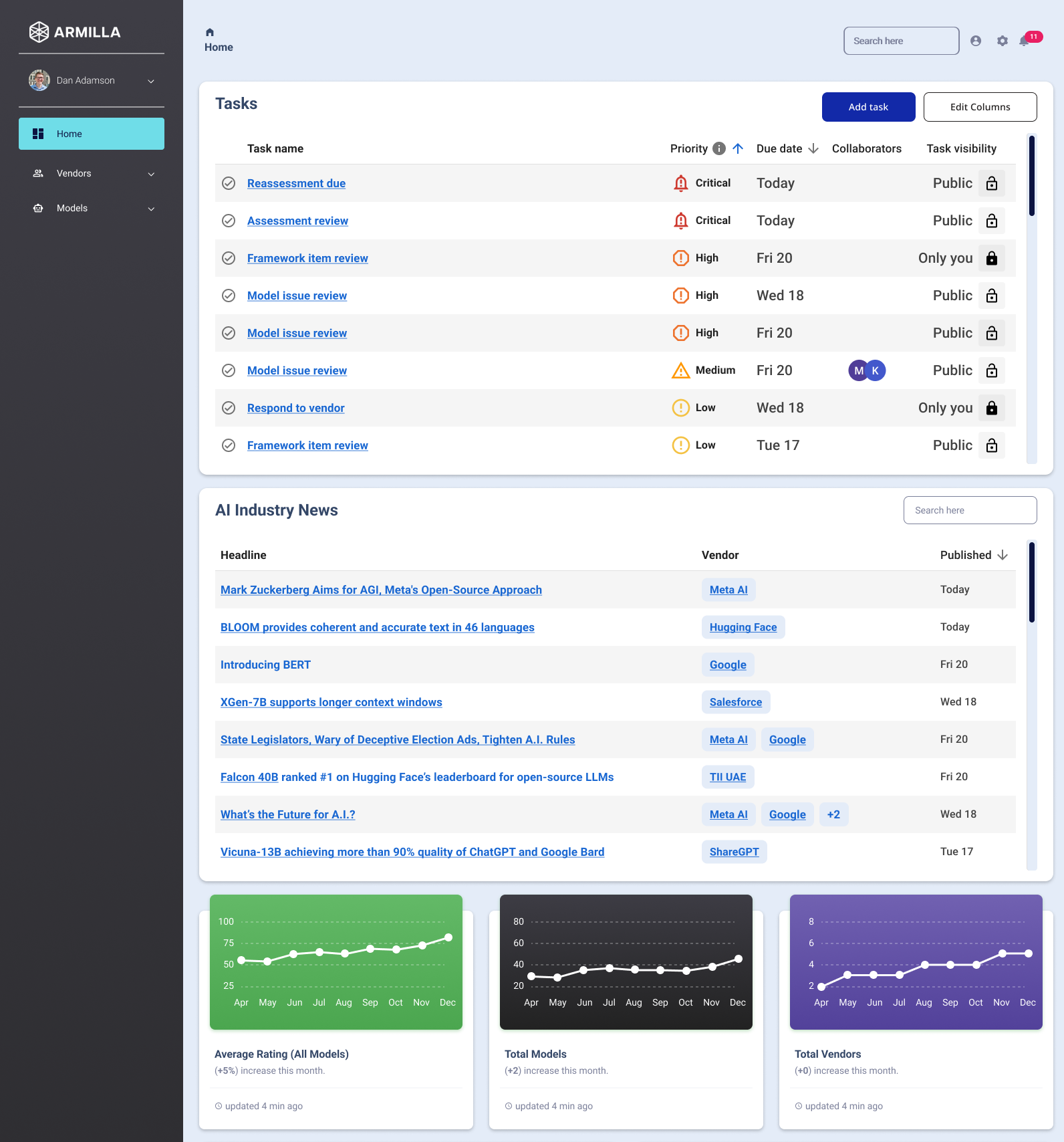

So Ramakrishnan teamed up with search algorithm expert and two-time startup founder Dan Adamson to launch Armilla AI, which provides AI model assurance to enterprise customers.

You may be wondering how Armilla can do this when most models are black boxes or gated behind licenses, subscriptions and APIs. I had the same question. Ramakrishnan's answer: benchmarking, plus a measured approach to customer acquisition.

Armilla takes models, whether open source or proprietary, and conducts assessments to “validate their quality” based on the global AI regulatory landscape. The company leverages in-house evaluation technology to test for hallucinations, racial and gender bias and fairness, general robustness and security, and more across a variety of theoretical applications and use cases.

Image Credits: Armilla

If a model passes the assessment, Armilla backs it with a warranty under which the model's buyer can be reimbursed the fees they paid to use the model.

“What we’re really providing businesses is trust in the technology that they’re sourcing from third-party AI vendors,” Ramakrishnan said. “Companies can come to us and have us assess the vendors they are considering. Just as they do penetration testing for new technologies, we do penetration testing for AI.”

I asked Ramakrishnan whether there were models Armilla won't test for ethical reasons, for example facial recognition algorithms from vendors known to work with questionable actors. He said:

“Producing assessments and reports that give false confidence in AI models that are problematic for clients and society would go not only against our ethics, but also against our business model, which is built on trust. Legally, we won't take on clients whose models are banned or prohibited by the EU, such as certain facial recognition or biometric categorization systems. We will, however, accept applications that fall into the ‘high-risk’ category as defined by the EU AI Act.”

Now, the concept of warranties and insurance coverage for AI isn't new, which, frankly, surprised me. Last year, Munich Re debuted aiSure, an insurance product designed to protect against losses from potentially unreliable AI models by running them through benchmarks similar to Armilla's. Beyond warranties, a growing number of vendors, including OpenAI, Microsoft, and AWS, offer protections against copyright infringement claims that may arise from the deployment of their AI tools.

But Ramakrishnan insists that Armilla's approach is unique.

“Our assessments touch on a wide range of areas, including KPIs, processes, performance, data quality, and qualitative and quantitative criteria, and we conduct them at a fraction of the cost and time,” he added. “We evaluate AI models against the requirements set out in laws such as the EU AI Act and New York City’s AI hiring bias law (NYC Local Law 144), as well as other state regulations such as Colorado’s AI quantitative testing regulation and the New York State insurance circular on the use of AI in underwriting and pricing. We are also prepared to carry out evaluations against the requirements of other emerging regulations, such as Canada’s AI and Data Act, as they come into force.”

Armilla, which launched its insurance offering in late 2023 with the backing of carriers Swiss Re, Greenlight Re and Chaucer, claims to have around 10 customers, including a healthcare company applying GenAI to process medical records. Ramakrishnan said Armilla's customer base has grown 2x month-over-month since Q4 2023.

“We serve two main types of customers: enterprises and third-party AI vendors,” said Ramakrishnan. “Enterprises use our warranties to protect themselves when sourcing from third-party AI vendors. Third-party vendors use our warranties as a seal of approval that their products can be trusted, which helps shorten their sales cycles.”

Warranties for AI make intuitive sense. But one question lingers in my mind: Will Armilla be able to keep up with rapidly changing AI policy (e.g., New York City's hiring algorithm bias law, the EU AI Act and so on)? If not, its evaluations and contracts risk being incomplete.

Ramakrishnan dismissed the concern.

“Regulations are evolving independently and rapidly in many jurisdictions, and it will be important to understand the nuances of laws around the world,” he said. “There is no one-size-fits-all approach that can be applied as a global standard, so we have to piece it all together. This is difficult, but it also has the advantage of creating a ‘moat’ for us.”

Headquartered in Toronto with 13 employees, Armilla has raised a total of $7 million from investors including Greycroft, Differential Venture Capital, Mozilla Ventures, Betaworks Ventures, MS&AD Ventures, 630 Ventures, Morgan Creek Digital, Y Combinator, Greenlight Re and Chaucer. Ramakrishnan said the proceeds will be used to expand Armilla's existing warranties and introduce new products.

“Insurance has the biggest role to play in addressing AI risks, and Armilla is at the forefront of developing insurance products that enable businesses to deploy AI solutions safely,” said Ramakrishnan.