Deepgram has made a name for itself as one of the go-to startups for voice recognition. Today, the well-funded company announced the launch of Aura, its new real-time text-to-speech API. Aura combines highly realistic voice models with a low-latency API so developers can build real-time conversational AI agents. Backed by large language models (LLMs), these agents can then stand in for customer service agents in call centers and other customer-facing settings.

As Deepgram co-founder and CEO Scott Stephenson told me, it has long been possible to get access to great voice models, but they were expensive and slow to compute. Low-latency models, on the other hand, tend to sound robotic. Deepgram's Aura pairs human-like voice models with very fast rendering (typically well under half a second) and, as Stephenson pointed out repeatedly, does so at a low price.

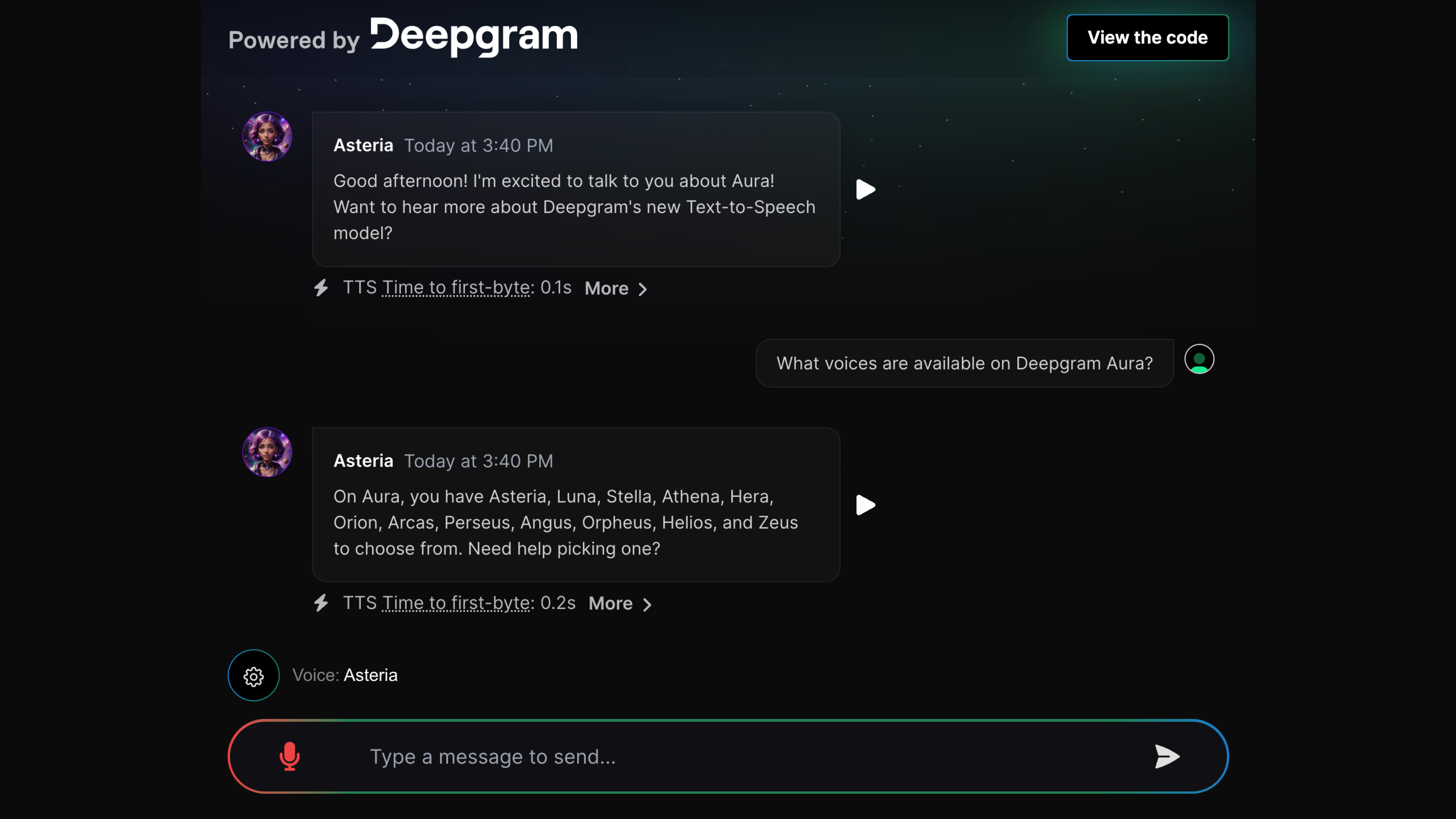

Image Credits: Deepgram

“Everybody now is like, 'Hey, we need real-time voice AI bots that can perceive what is being said, understand it and generate a response, and then they can speak back,'” he said. In his view, making a product like this worthwhile for businesses takes a combination of accuracy (which he described as table stakes for a service like this), low latency and acceptable cost.

Deepgram argues that Aura's pricing, currently $0.015 per 1,000 characters, beats nearly all of its competitors. That's not far off from Google's WaveNet voices at $0.016 per 1,000 characters and Amazon Polly's Neural voices at the same $0.016 per 1,000 characters, but it is cheaper. Amazon's highest tier, though, is significantly more expensive.
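For a rough sense of what a tenth of a cent per 1,000 characters means at scale, here is a back-of-the-envelope sketch that uses only the list prices quoted above. The one-million-character volume is an arbitrary illustration, and the calculation ignores free tiers, volume discounts and Amazon's pricier top tier.

```python
# List prices per 1,000 characters (USD), as quoted in this article.
PRICE_PER_1K_CHARS = {
    "Deepgram Aura": 0.015,
    "Google WaveNet": 0.016,
    "Amazon Polly Neural": 0.016,
}


def cost(chars: int, price_per_1k: float) -> float:
    """Return the list-price cost in USD of synthesizing `chars` characters."""
    return chars / 1000 * price_per_1k


# Illustrative volume (an assumption, not a figure from the article).
volume = 1_000_000
for name, price in PRICE_PER_1K_CHARS.items():
    print(f"{name}: ${cost(volume, price):.2f}")
```

At that volume the gap works out to about $15 versus $16, which is why the difference only becomes meaningful for high-volume deployments such as call centers.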

“You have to have a really good price point across all [segments], but you also need amazing latency, speed and amazing accuracy. So it's a really hard thing to hit,” Stephenson said of Deepgram's general approach to building its products. “But this is what we were focused on from the beginning, and it's why we spent four years building before we released anything: we were building the underlying infrastructure to make it real.”

Aura currently offers around a dozen voice models, all of which were trained on a dataset Deepgram created together with voice actors. Like all of the company's other models, the Aura models were trained in-house.

You can try the Aura demo here. I've been testing it for a bit, and while I occasionally ran into some odd pronunciations, the speed is what really stands out, along with Deepgram's existing high-quality speech-to-text model. To highlight how quickly it generates responses, the demo shows how long it took the model to start speaking (generally less than 0.3 seconds) and how long it took the LLM to finish generating its response (typically just under a second).