AI-powered video generation has become a hot market following the release of OpenAI's Sora model last month. DeepMind alumni Yishu Miao and Ziyu Wang have publicly released Haiper, a video generation tool built on their own AI model.

Miao, who previously worked on TikTok's Global Trust & Safety team, and Wang, who has worked as a researcher at both DeepMind and Google, started working on the company in 2021 and officially incorporated it in 2022.

The two have expertise in machine learning and started out working on the problem of 3D reconstruction using neural networks. After training on video data, Miao told TechCrunch on a call, they found that video generation was a more interesting problem than 3D reconstruction. That's why Haiper decided to focus on video generation about six months ago.

Haiper has raised $13.8 million in a seed round led by Octopus Ventures with participation from 5Y Capital. Before that, angels like Geoffrey Hinton and Nando de Freitas helped the company raise $5.4 million in a pre-seed round in April 2022.

Video generation service

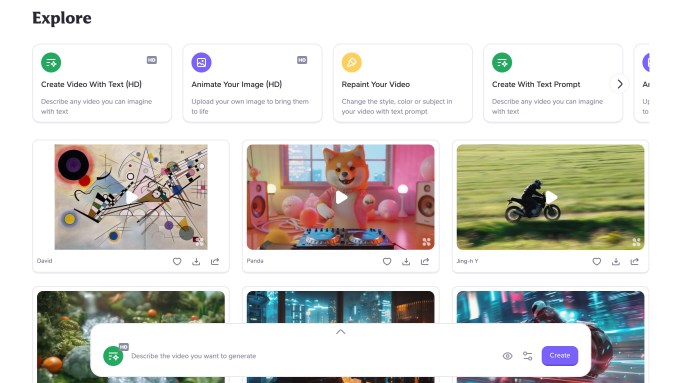

Users can visit Haiper's site and start generating videos for free by entering text prompts. However, there are certain limitations: the tool can only produce HD videos that are 2 seconds long, and slightly lower-quality videos of up to 4 seconds.

Image credit: Haiper

The site can also animate images and repaint videos in a different style. The company is working on additional features as well, such as the ability to extend videos.

Miao said the company aims to keep these features free to build the community. He noted that it's “too early” in the startup's journey to think about building a subscription product around video generation. However, it is working with companies like JD.com to explore commercial use cases.

I generated a sample video using one of the original Sora prompts: “Several giant woolly mammoths approach as they walk across a snowy meadow. Their long woolly mammoth fur blows lightly in the wind as they walk, painting a picture of snow-covered trees and dramatic snow-capped mountains. The mid-afternoon light in the distance, wispy clouds and the sun in the distance creates a warm glow, and a low camera perspective captures the large furry mammal with beautiful photography and depth of field.”

Building the core video model

Haiper is currently focused on its consumer-facing website, but it wants to build a core video generation model that can be offered to other companies as well. The company has not disclosed details of this model.

Miao said the company has privately reached out to a number of developers to try out its closed API. He expects developer feedback to be critical in helping the company iterate on the model quickly. Haiper is also considering open sourcing its models in the future to allow people to explore different use cases.

The CEO believes it is now important to solve the uncanny valley problem (the phenomenon where seeing human-like figures generated by AI evokes eerie feelings) in video generation.

“We're not working on solving problems in the content or style areas, but fundamental problems like how an AI-generated human looks when walking or when it's snowing. We are working on it,” he said.

The company currently has approximately 20 employees and is actively hiring for multiple roles across engineering and marketing.

Upcoming competition

OpenAI's recently released Sora is probably Haiper's best-known competitor at the moment. However, there are other players too, such as Google- and Nvidia-backed Runway, which has raised more than $230 million in funding. Google and Meta also have their own video generation models. Last year, Stability AI announced its Stable Video Diffusion model in a research preview.

Rebecca Hunt, partner at Octopus Ventures, believes that over the next three years, Haiper will need to build a strong video generation model to achieve differentiation in this market.

“Realistically, only a handful of people are in a position to achieve this. This is one of the reasons we wanted to support the Haiper team. Once we get to a point where all of that physics is reflected, there will come a time when the applications will be limitless,” she told TechCrunch via email.

While investors are looking to back AI-powered video generation startups, they also believe the technology still has room for improvement.

As a16z's Justin Moore wrote last year: “AI video feels like it's at the GPT-2 level. We've made great strides in the last year, but we still have a long way to go before everyday consumers can use these products on a daily basis. When will the ‘ChatGPT moment’ arrive for video?”