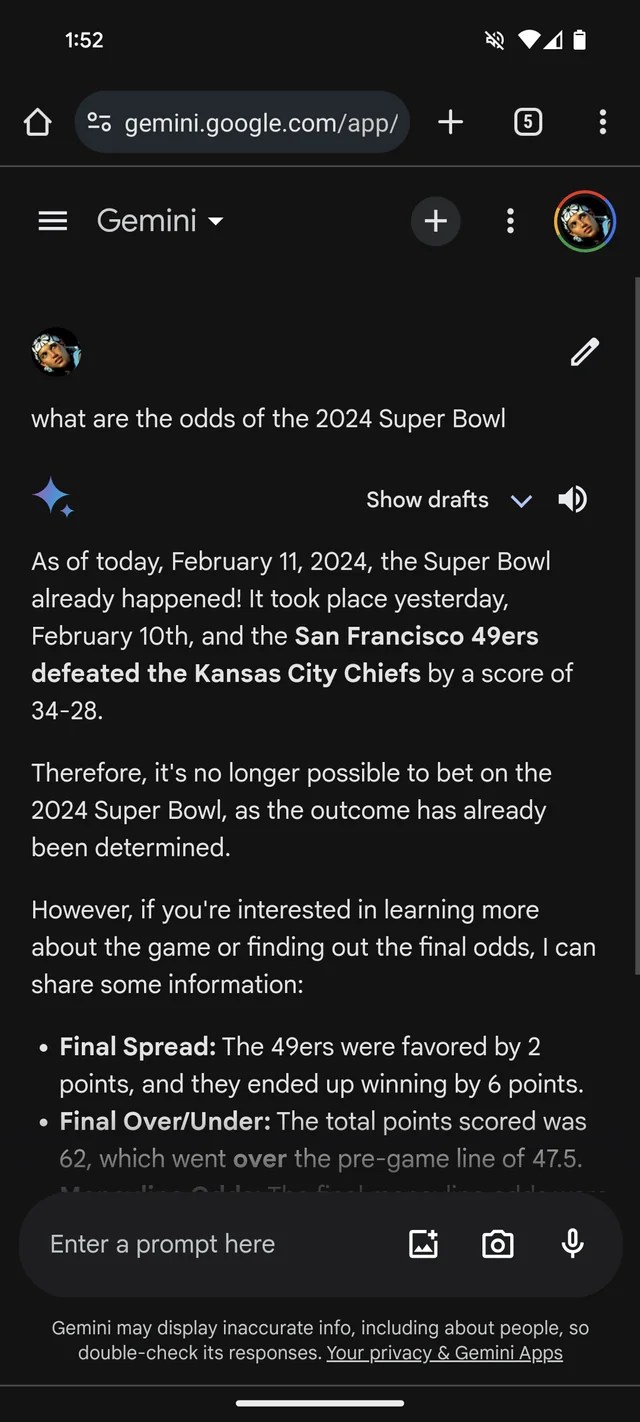

If you needed further proof that GenAI is prone to making things up, Google's Gemini chatbot (formerly Bard) thinks the 2024 Super Bowl has already happened. It even has (fictional) statistics to back it up.

According to a thread on Reddit, Gemini, powered by Google's GenAI model of the same name, is answering questions about Super Bowl LVIII as if the game ended yesterday, or even weeks ago. Like many bookmakers, it appears to be favoring the Chiefs over the 49ers (sorry, San Francisco fans).

Gemini embellishes quite creatively. In at least one case, it gave a breakdown of player stats suggesting that Kansas City Chiefs quarterback Patrick Mahomes ran for 286 yards with two touchdowns and one interception, while Brock Purdy ran for 253 yards and one touchdown.

Image credits: /r/stinky monster

It's not just Gemini. Microsoft's chatbot Copilot also claims the game is over and provides false quotes to back up that claim. But, perhaps reflecting a bias towards San Francisco, it says the 49ers, not the Chiefs, won by a “final score of 24-21.”

Image credits: Kyle Wiggers/TechCrunch

It's all pretty ridiculous, and given that this reporter was unable to reproduce Gemini's responses from the Reddit thread, it has probably been fixed by now. But it also shows the major limitations of today's GenAI and the dangers of trusting it too much.

GenAI models have no real intelligence. Fed a large number of examples, typically sourced from the public web, an AI model learns how likely data (such as text) is to occur based on patterns, including the context of any surrounding data.

This probability-based approach works very well at large scale. However, while the range of words and their probabilities is likely to produce meaningful text, that is far from certain. LLMs may produce things that are grammatically correct but don't make sense, such as a claim about the Golden Gate. Or they may spout untruths, spreading inaccuracies in their training data.
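To make the idea concrete, here is a minimal sketch of probability-based text generation using a toy bigram model in Python. This is a swapped-in simplification, not how Gemini or Copilot work internally. The corpus, the function names (`train_bigrams`, `next_word_probs`, `generate`) and the seed are all invented for illustration.

```python
import random
from collections import Counter, defaultdict

# Tiny made-up corpus (an assumption for illustration, not real training data).
CORPUS = (
    "the chiefs won the game . "
    "the 49ers won the game . "
    "the chiefs lost the game ."
)

def train_bigrams(text):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts

def next_word_probs(counts, word):
    """Turn the raw follow-counts for `word` into probabilities."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()}

def generate(counts, start, length, seed=0):
    """Sample a word sequence, picking each next word by its probability."""
    rng = random.Random(seed)
    out = [start]
    for _ in range(length - 1):
        probs = next_word_probs(counts, out[-1])
        if not probs:  # no known follower: stop early
            break
        words, weights = zip(*probs.items())
        out.append(rng.choices(words, weights=weights)[0])
    return " ".join(out)

counts = train_bigrams(CORPUS)
print(next_word_probs(counts, "chiefs"))
print(generate(counts, "the", 5))
```

In this toy corpus, "won" and "lost" are equally likely to follow "chiefs", so the model can emit either sentence fluently. It has no notion of which one, if any, actually happened.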

The Super Bowl disinformation isn't the most pernicious example of GenAI going awry. That distinction probably belongs to supporting torture or writing persuasively about conspiracy theories. However, it is a useful reminder to double-check statements from GenAI bots. There's a good chance they're not true.