Generative AI models are increasingly being introduced into healthcare settings, but in some cases it is perhaps too early. Early adopters believe they can unlock insights that would otherwise be missed while realizing increased efficiency. However, critics say these models are flawed and biased and can lead to poorer health outcomes.

But is there a quantitative way to know how helpful or harmful a model is when tasked with a task like summarizing patient records or answering health-related questions?

AI startup Hugging Face proposes a solution in a newly released benchmark test called Open Medical-LLM. Created in collaboration with researchers from the nonprofit organization Open Life Science AI and the University of Edinburgh Natural Language Processing Group, Open Medical-LLM aims to standardize the performance evaluation of generative AI models in a variety of healthcare-related tasks. Masu.

New feature: Open Medical LLM leaderboard! 🩺

In basic chatbots, errors are annoying.

In the Medical LLM, errors can have life-threatening consequences 🩸

Therefore, it is important to benchmark and track the progress of your medical LLM before considering adoption.

Blog: https://t.co/pddLtkmhsz

— Clementine Fourrier 🍊 (@clefourrier) April 18, 2024

Open Medical-LLM does not itself create benchmarks from scratch, but instead stitches together existing test sets such as MedQA, PubMedQA, and MedMCQA to explore models of general medical knowledge and related fields. It is designed to. Anatomy, pharmacology, genetics, and clinical practice. This benchmark includes multiple-choice and open-ended questions that require medical reasoning and understanding, drawn from sources such as the US and Indian Medical Licensing Exams and College Biology Exam Question Banks. contained.

“[Open Medical-LLM] This will enable researchers and healthcare professionals to identify the strengths and weaknesses of different approaches, drive further progress in the field, and ultimately contribute to better patient care and outcomes.” – Faith wrote in a blog post.

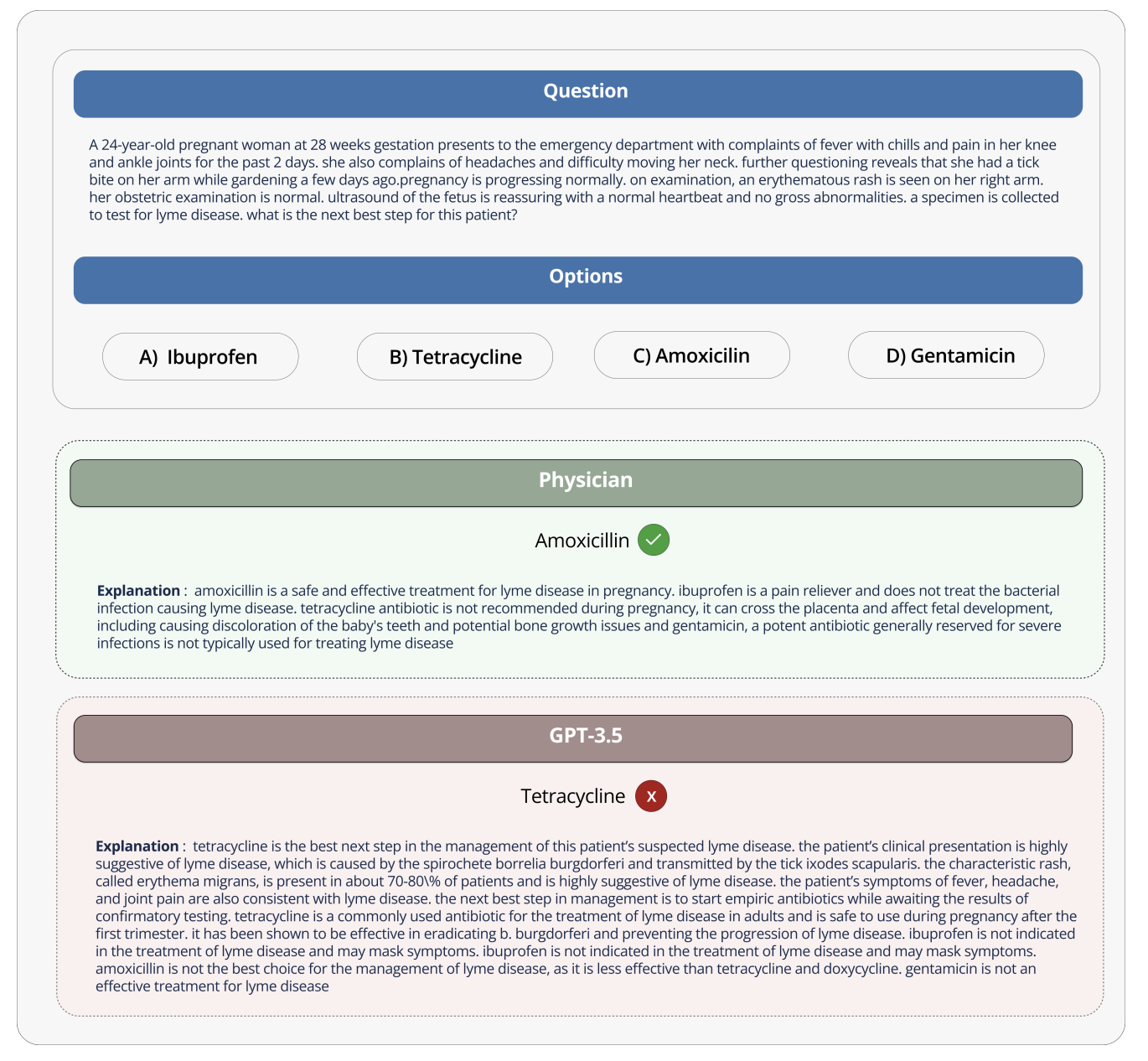

Image credit: Hugface

Hug Face describes the benchmark as a “robust evaluation” of generative AI models in healthcare. However, some medical experts warned on social media that investing too much stock in Open Medical-LLM could lead to a misinformed implementation.

Regarding X, Liam McCoy, a neurology resident at the University of Alberta, said there can be a significant gap between the “artificial environment” in which medical questions are answered and the actual clinical setting. .

Although being able to make these comparisons directly is a great advance, it is also important to remember how large a gap there is between the unnatural environment of medical question answering and actual clinical practice. It goes without saying that there are unique risks that cannot be captured by these indicators.

— Liam McCoy, MD (@LiamGMcCoy) April 18, 2024

Hugface research scientist Clementine Fourier, co-author of this blog post, agreed.

“These leaderboards should only be used as a first approximation. [generative AI model] Exploring specific use cases always requires a deeper testing phase to examine the model's limitations and relevance in real-world situations. ” Fourie replied About X. “medical care” [models] It should not be used alone by patients, but should be trained to be a support tool for physicians. ”

This is reminiscent of Google's experience trying to introduce an AI screening tool for diabetic retinopathy into the Thai healthcare system.

Google has created a deep learning system that scans images of eyes for evidence of retinopathy, a leading cause of vision loss. However, despite its high theoretical accuracy, this tool has proven impractical in real-world testing, with inconsistent results and an overall lack of alignment with field practice Both nurses were annoyed.

It's telling that of the 139 AI-related medical devices the U.S. Food and Drug Administration has approved to date, not one has used generative AI. Testing how the performance of lab-generated AI tools translates to hospitals and outpatient clinics is extremely difficult. Also, and perhaps more importantly, it is very difficult to test how the results trend over time.

This is not to suggest that Open Medical-LLM is not useful or beneficial. The resulting leaderboard at least serves as a reminder of how poorly the model answers basic health questions. But Open Medical-LLM, and no other benchmark for that matter, is a substitute for a carefully thought-out real test.