India, a country with a long history of using technology to persuade its citizens, has become one of the world's hot spots when it comes to how AI is used and abused in political discourse, especially in democratic processes. The technology companies that built the tools in the first place are now visiting the country to promote solutions.

Earlier this year, Andy Parsons, the Adobe senior director who oversees the company's involvement in the cross-industry Content Authenticity Initiative (CAI), stepped into the fray when he traveled to India to meet with media and technology organizations there and promote tools that can be integrated into content workflows to identify and flag AI content.

“Rather than detecting what is fake or manipulated, we as a society, and this is also an international concern, should start declaring authenticity, meaning declaring whether something was generated by AI so that consumers know,” he said in an interview.

Parsons added that some Indian companies, which are not currently part of the Munich AI election safety accord signed in February by OpenAI, Adobe, Google and Amazon, intend to build a similar alliance in the country.

“Legislation is very difficult. It is hard to rely on governments in any jurisdiction legislating correctly and quickly enough. It's better for governments to take a very steady approach and take their time,” he said.

Detection tools are notoriously inconsistent, but they are a start toward fixing some of these problems, or so the argument goes.

“The concept is already well understood,” he said during a visit to Delhi. “What I'm helping to do is raise awareness that the tools are ready too. It's not just an idea. This is something that's already in place.”

Andy Parsons, senior director, Adobe. Image credit: Adobe

The CAI, which promotes royalty-free, open standards for identifying whether digital content was generated by a machine or a human, predates the current hype around generative AI: it was founded in 2019 and now has 2,500 members, including Microsoft, Meta, Google, The New York Times, The Wall Street Journal and the BBC.

Just as there is an industry growing around the business of leveraging AI to create media, a small industry has also been created to try to fix some of its more nefarious applications.

So in February 2021, Adobe went a step further toward building one of these standards itself, co-founding the Coalition for Content Provenance and Authenticity (C2PA) with ARM, the BBC, Intel, Microsoft and Truepic. The coalition aims to develop an open standard that uses the metadata of images, video, text and other media to highlight their provenance, telling people where a file came from, where and when it was generated, and whether it was modified before it reached the user. The CAI works with the C2PA to promote the standard and make it available to the public.

The CAI is now actively working with governments such as India's to widen adoption of the standard for highlighting the provenance of AI content, and is collaborating with authorities on guidelines for the advancement of AI.

Adobe has a great deal at stake in this game. The company has not yet acquired or built a large language model of its own, but as the home of apps like Photoshop and Lightroom, it is the market leader in tools for the creative community. So it is not only building new products like Firefly to generate AI content natively, but also infusing its legacy products with AI. If the market develops as some predict, AI will be a must-have if Adobe wants to stay on top. If regulators (or common sense) have their way, Adobe's future may depend on how successful it is at ensuring that what it sells doesn't contribute to the mess.

In any case, the bigger picture in India is a messy one.

Google has used India as a test bed for how it will restrict the use of its generative AI tool Gemini for election content. Political parties are weaponizing AI to create memes featuring caricatures of their opponents. Meta has set up a deepfake “helpline” for WhatsApp, such is the messaging platform's popularity for spreading AI-powered messages. And at a time when countries are growing increasingly wary about AI safety and what they must do to ensure it, the Indian government decided in March to ease rules on how new AI models are built, tested and deployed. The impact of that decision remains to be seen, but it is certainly intended to spur more AI activity.

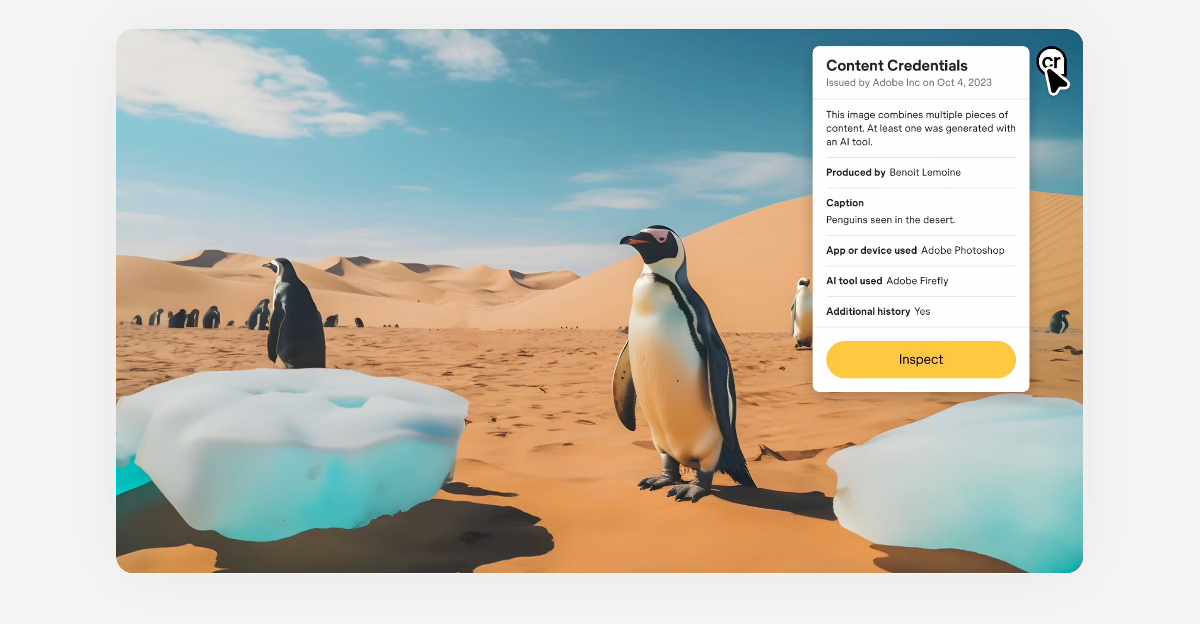

Using its open standard, the C2PA developed a digital “nutrition label” for content called Content Credentials. CAI members are working to attach this digital watermark to their content to let users know its origin and whether it was generated by AI. Adobe has Content Credentials across its creative tools, including Photoshop and Lightroom, and they are automatically attached to content generated by Adobe's AI model Firefly. Last year, Leica launched a camera with Content Credentials built in, and Microsoft added Content Credentials to all AI-generated images created with Bing Image Creator.

Image credit: Content Credentials

Parsons told TechCrunch that the CAI is in discussions with governments around the world on two fronts: one is helping to promote the standard as an international standard, and the other is getting governments to adopt it.

“In an election year, it's especially important for candidates, political parties, incumbent governments and administrations, who are releasing material to the media and the public all the time, to make it knowable that when something is released from the office of Prime Minister [Narendra] Modi, it is actually from PM Modi's office. There have been many cases where that is not so. It is therefore very important for consumers, fact-checkers, platforms and intermediaries to be able to tell that something is truly authentic,” he said.

India's large population and vast linguistic and demographic diversity make it difficult to curb misinformation, he added, arguing in favor of a simple label to cut through it.

“It's a little 'CR'… two Western letters, like most Adobe tools, but it indicates there's more context to be seen,” he said.

Controversy continues over the real motive behind tech companies' support for all kinds of AI safety measures. Is it genuinely about existential concerns, or is it about securing a seat at the table to give the impression of existential concern while making sure their interests are protected in the rule-making process?

“It's generally not controversial among the companies involved. All the companies that signed the recent Munich accord, including Adobe, came together and set aside competitive pressures because these ideas are something we all need to pursue,” he said in defense of the work.