Will generative AI designed for the enterprise (for example, AI that autocompletes reports, spreadsheet formulas, and so on) ever be interoperable? Together with a group of organizations including Cloudera and Intel, the Linux Foundation, the nonprofit organization that supports and maintains a growing number of open source efforts, aims to find out.

The Linux Foundation today announced the launch of the Open Platform for Enterprise AI (OPEA), a project to foster the development of open, multi-provider, and composable (i.e., modular) generative AI systems. Under the purview of the Linux Foundation's LF AI & Data organization, which focuses on AI- and data-related platform initiatives, OPEA's goal is to pave the way for the release of “hardened,” “scalable” generative AI systems that “harness the best open source innovation from across the ecosystem,” LF AI & Data executive director Ibrahim Haddad said in a press release.

“OPEA will unlock new possibilities for AI by creating a detailed, configurable framework at the forefront of the technology stack,” Haddad said. “This initiative is a testament to our mission to advance open source innovation and collaboration within the AI and data community under a neutral and open governance model.”

In addition to Cloudera and Intel, OPEA, one of the Linux Foundation's Sandbox Projects (an incubator program of sorts), counts among its members enterprise heavyweights such as IBM-owned Red Hat, Hugging Face, Domino Data Lab, MariaDB, and VMware.

So what exactly might they build together? Haddad hints at a few possibilities, such as “optimized” support for AI toolchains and compilers, which enable AI workloads to run across different hardware components, as well as “heterogeneous” pipelines for retrieval-augmented generation (RAG).

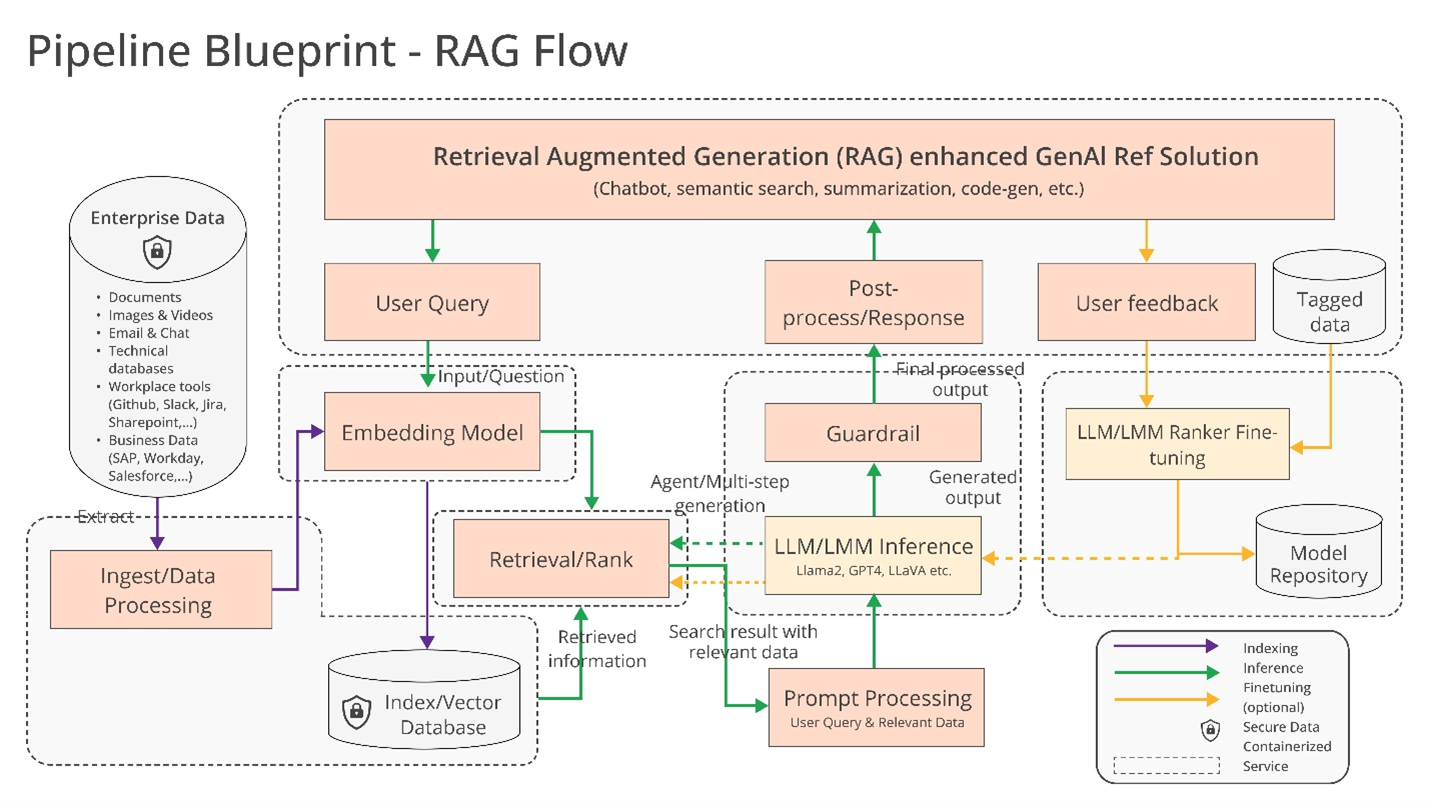

RAG is becoming increasingly popular in enterprise applications of generative AI, and it's not hard to see why. Most generative AI models' answers and actions are limited to the data they were trained on. With RAG, however, a model's knowledge base can be extended to information outside the original training data. A RAG model references this external information (which can take the form of proprietary company data, a public database, or some combination of the two) before generating a response or performing a task.

A diagram explaining the RAG model.
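To make the retrieve-then-generate flow described above a little more concrete, here is a minimal Python sketch. It is not OPEA's or Intel's implementation, and several pieces are assumptions for illustration: the documents are invented, retrieval uses simple bag-of-words cosine similarity in place of the vector embeddings that production RAG systems typically use, and `generate` is a hypothetical stand-in for a call to a real model.

```python
import math
import re
from collections import Counter
from typing import Callable

# Hypothetical "external knowledge" store. In an enterprise deployment this
# might be proprietary documents, a public database, or both.
DOCUMENTS = [
    "Our refund policy allows returns within 30 days of purchase.",
    "The Q3 sales report shows revenue grew in the EMEA region.",
    "Support tickets are triaged by severity before assignment.",
]


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Retrieval step: return the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Augmentation step: prepend retrieved passages to the user's question."""
    lines = "\n".join(f"- {c}" for c in context)
    return f"Answer using this context:\n{lines}\n\nQuestion: {query}"


def rag_answer(query: str, generate: Callable[[str], str], k: int = 1) -> str:
    """Generation step: hand the augmented prompt to a (hypothetical) model."""
    context = retrieve(query, DOCUMENTS, k)
    return generate(build_prompt(query, context))


if __name__ == "__main__":
    # Stand-in "model" that simply echoes the augmented prompt it receives.
    print(rag_answer("How many days do I have to return a purchase?", lambda p: p))
```

The model itself never changes here; only its input does. That is the appeal the article describes: swapping in a different document store (or, in a real system, an embedding model and vector database) extends what the model can draw on without retraining it.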

Intel provided some more details in its own press release.

> Enterprises are taking a do-it-yourself approach [to RAG] because there are no de facto standards across components that allow enterprises to choose and deploy RAG solutions that are open and interoperable and that help them get to market quickly. OPEA intends to address these issues by collaborating with the industry to standardize components, including frameworks, architecture blueprints, and reference solutions.

Evaluation will also be an important part of OPEA's efforts.

In its GitHub repository, OPEA proposes a rubric for grading generative AI systems along four axes: performance, features, trustworthiness, and “enterprise-grade” readiness. Performance, as OPEA defines it, pertains to “black-box” benchmarks from real-world use cases. Features is an appraisal of a system's interoperability, deployment choices, and ease of use. Trustworthiness looks at an AI model's ability to guarantee “robustness” and quality. And enterprise readiness focuses on the requirements to get a system up and running without major issues.

Rachel Roumeliotis, director of open source strategy at Intel, said OPEA will work with the open source community to offer tests based on the rubric, as well as to assess and grade generative AI deployments on request.

OPEA's other endeavors are still up in the air at the moment. But Haddad floated the possibility of open model development along the lines of Meta's expanding Llama family and Databricks' DBRX. Toward that end, Intel has already contributed reference implementations of a generative AI-powered chatbot, document summarizer, and code generator, optimized for its Xeon 6 and Gaudi 2 hardware, to the OPEA repository.

Now, OPEA's members are very clearly invested (and self-interested, for that matter) in building tooling for enterprise generative AI. Cloudera recently launched partnerships to create what it's pitching as an “AI ecosystem” in the cloud. Domino offers a suite of apps for building and auditing generative AI for business. And VMware, which is oriented toward the infrastructure side of enterprise AI, rolled out new “private AI” compute products last August.

The question is whether, under OPEA, these vendors will actually work together to build mutually compatible AI tools.

There are clear advantages to doing so. Customers will happily draw on multiple vendors depending on their needs, resources, and budgets. But history has shown that it's all too easy to slide into vendor lock-in. Let's hope that's not the ultimate outcome here.