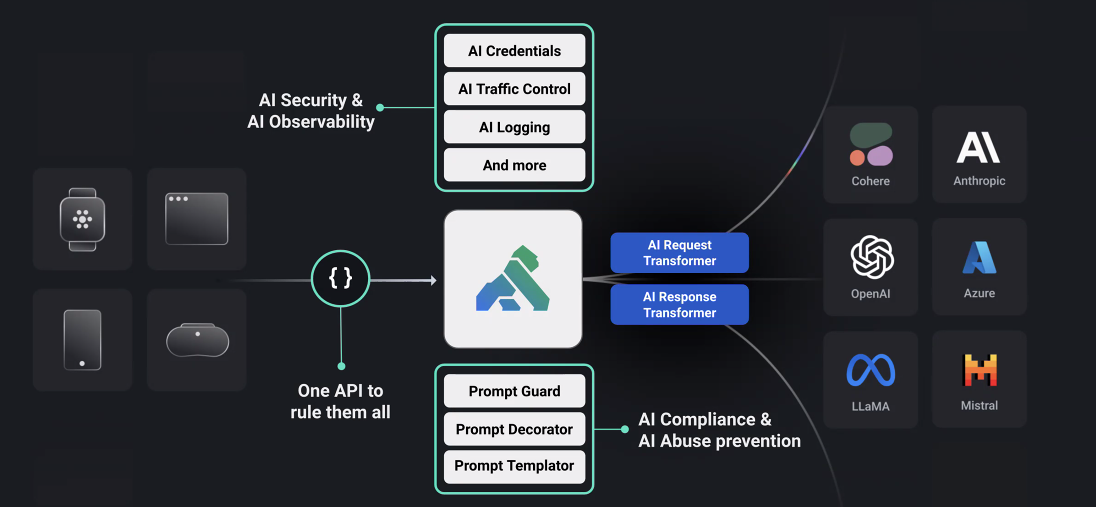

API company Kong today announced its open source AI Gateway, an extension of its existing API gateway that allows developers and operations teams to integrate their applications with one or more large language models (LLMs) and access them through a single API. In addition, Kong is launching several AI-specific features, including prompt engineering and credential management.

“We see AI as an additional use case for the API,” Kong co-founder and CTO Marco Palladino told me. “APIs are driven by use cases like mobile, microservices, and AI just happens to be the newest thing. When we look at AI, we're looking at APIs everywhere. We're looking at APIs for consuming AI, APIs for fine-tuning AI, and AI itself. […] Therefore, the more AI we have, the more APIs we will use in the world.”

Palladino argues that while nearly every organization is considering how to use AI, they also fear data leakage. Eventually, he believes these companies will run models locally and use the cloud as a fallback. But in the meantime, these companies also need to find ways to manage credentials to access cloud-based models, control and log traffic, manage quotas, and more.
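The article does not describe how Kong implements quotas or traffic logging. As an illustration only, here is a minimal Python sketch of the general idea: a hypothetical in-memory, per-consumer request counter (the `QuotaTracker` and `QuotaExceeded` names are invented for this example) that logs each request and refuses new ones once a fixed limit is reached.

```python
import logging
from collections import defaultdict
from typing import Dict

# Hypothetical gateway-side bookkeeping; not Kong's implementation.
logging.basicConfig(level=logging.INFO)
log = logging.getLogger("ai-gateway")


class QuotaExceeded(Exception):
    """Raised when a consumer has used up its request allowance."""


class QuotaTracker:
    """Count requests per consumer and refuse them once a fixed limit is hit."""

    def __init__(self, limit: int) -> None:
        self.limit = limit
        self.used: Dict[str, int] = defaultdict(int)

    def check(self, consumer: str) -> None:
        # Refuse before forwarding anything to a paid, cloud-hosted model.
        if self.used[consumer] >= self.limit:
            log.warning("quota exceeded for %s", consumer)
            raise QuotaExceeded(consumer)
        self.used[consumer] += 1
        log.info("%s: request %d of %d", consumer, self.used[consumer], self.limit)
```

A real gateway would persist these counters and reset them per time window; the in-memory dictionary here only keeps the sketch self-contained.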

“With the AI Gateway, we wanted to build a product that allows developers to be more productive when building on AI, by letting them leverage one or more LLM providers without changing their code,” he said. The gateway currently supports Anthropic, Azure, Cohere, Meta's LLaMA models, Mistral, and OpenAI.
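To make the "one API, many providers" idea concrete, here is a minimal Python sketch under loudly stated assumptions: the `Gateway` class, its `chat` method, and the stubbed `local_model` and `cloud_model` backends are all hypothetical and do not reflect Kong's configuration or API. Applications call a single entry point, and the gateway decides which backend serves the request, falling back to the next provider in a configured order (echoing Palladino's local-first, cloud-as-fallback scenario).

```python
from typing import Callable, Dict, List, Optional

# A provider adapter takes a prompt and returns the model's text.
# Real providers have different request formats; these are stubs.
ProviderFn = Callable[[str], str]


class Gateway:
    """Toy stand-in for an AI gateway: one entry point, several LLM backends."""

    def __init__(self, providers: Dict[str, ProviderFn], order: List[str]) -> None:
        self.providers = providers
        self.order = order  # preferred provider first, fallbacks after

    def chat(self, prompt: str, provider: Optional[str] = None) -> str:
        # An explicitly requested provider wins; otherwise try the configured order.
        candidates = [provider] if provider else self.order
        last_error: Optional[Exception] = None
        for name in candidates:
            try:
                return self.providers[name](prompt)
            except Exception as exc:  # demo only: any failure means "try the next one"
                last_error = exc
        raise RuntimeError("all providers failed") from last_error


def local_model(prompt: str) -> str:
    # Simulates a locally hosted model that is currently unavailable.
    raise ConnectionError("local model offline")


def cloud_model(prompt: str) -> str:
    return f"[cloud] {prompt}"


gw = Gateway({"local": local_model, "cloud": cloud_model}, order=["local", "cloud"])
```

Here `gw.chat("hi")` returns `"[cloud] hi"`: the local stub fails, so the gateway falls back to the cloud stub. The calling code never changes when the provider list or order does, which is the property Palladino describes.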

The Kong team argues that most other API providers currently handle AI APIs just like any other API. By layering AI-specific features on top of the API, however, the team believes it can enable new use cases (or at least make existing ones easier to implement). The AI Request Transformer and AI Response Transformer, part of the new AI Gateway, let developers modify prompts and their results on the fly, for example to automatically translate prompts or remove personally identifiable information.
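The article does not say how Kong's AI Request Transformer works internally. As an illustration only, here is a minimal Python sketch of the kind of on-the-fly prompt rewrite described above, assuming simple regex-based redaction of e-mail addresses and US-style phone numbers before a prompt leaves the gateway. The `redact_pii` function and its patterns are hypothetical and far from production-grade PII detection.

```python
import re

# Deliberately simple patterns; real PII detection needs much more than regexes.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact_pii(prompt: str) -> str:
    """Replace e-mail addresses and phone numbers with placeholder tokens."""
    prompt = EMAIL_RE.sub("[EMAIL]", prompt)
    prompt = PHONE_RE.sub("[PHONE]", prompt)
    return prompt
```

For example, `redact_pii("Contact jane.doe@example.com or 555-123-4567.")` yields `"Contact [EMAIL] or [PHONE]."`. Doing this at the gateway means every application behind it gets the same treatment without changing its own code.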

Prompt engineering is also deeply integrated into the gateway, allowing companies to enforce their own guidelines on top of these models. It also gives them a central place to manage those guidelines and prompts.
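What "a central point for managing guidelines and prompts" could look like is sketched below in Python. This is an assumption-laden illustration, not Kong's feature: the `SYSTEM_GUIDELINE` text, the `BLOCKED_TERMS` list, the `apply_guidelines` function, and the role/content message format are all hypothetical. The gateway rejects prompts containing blocked terms and prepends one shared system instruction to every conversation.

```python
from typing import Dict, List

# Hypothetical, centrally managed policy: defined once at the gateway
# instead of being copied into every application.
SYSTEM_GUIDELINE = "Answer politely and never reveal internal project names."
BLOCKED_TERMS = ("password", "social security number")

Message = Dict[str, str]  # assumed chat format: {"role": ..., "content": ...}


def apply_guidelines(messages: List[Message]) -> List[Message]:
    """Reject prompts with blocked terms, then prepend the shared system guideline."""
    for message in messages:
        text = message["content"].lower()
        for term in BLOCKED_TERMS:
            if term in text:
                raise ValueError(f"prompt rejected: contains blocked term {term!r}")
    return [{"role": "system", "content": SYSTEM_GUIDELINE}] + list(messages)
```

Because the policy lives in one place, updating `SYSTEM_GUIDELINE` changes the behavior of every application routed through the gateway at once.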

It has been almost nine years since Kong launched its API management platform. At the time, the company that is now Kong was still known as Mashape, but as Mashape/Kong co-founder and CEO Augusto Marietti told me in an interview earlier this week, the launch was pretty much a last-ditch effort. “Mashape went nowhere, but Kong became the number one API product on GitHub,” he said. Marietti noted that things have worked out well since then: Kong was cash-flow positive last quarter and is not currently looking to raise capital.

The Kong gateway is now the core of the company's platform and is also powering its new AI gateway. In fact, current Kong users will be able to access all new AI features simply by upgrading their current installation.

For now, the new AI capabilities are available for free. Kong plans to release paid premium features over time, but the team stressed that those are not the focus of this release.