Meta has released Llama 3, the latest entry in its Llama series of open source generative AI models. More precisely, the company has open sourced two models in the new Llama 3 family, with the rest to follow at an unspecified future date.

Meta describes the new models, Llama 3 8B, which contains 8 billion parameters, and Llama 3 70B, which contains 70 billion parameters, as a “huge improvement” in performance over the previous-generation Llama models, Llama 2 7B and Llama 2 70B. (Parameters essentially define an AI model's skill at a problem, such as analyzing or generating text. Models with more parameters are generally more capable than models with fewer parameters.) In fact, Meta says that Llama 3 8B and Llama 3 70B, which were trained on two custom-built 24,000-GPU clusters, are among the best-performing generative AI models available today.

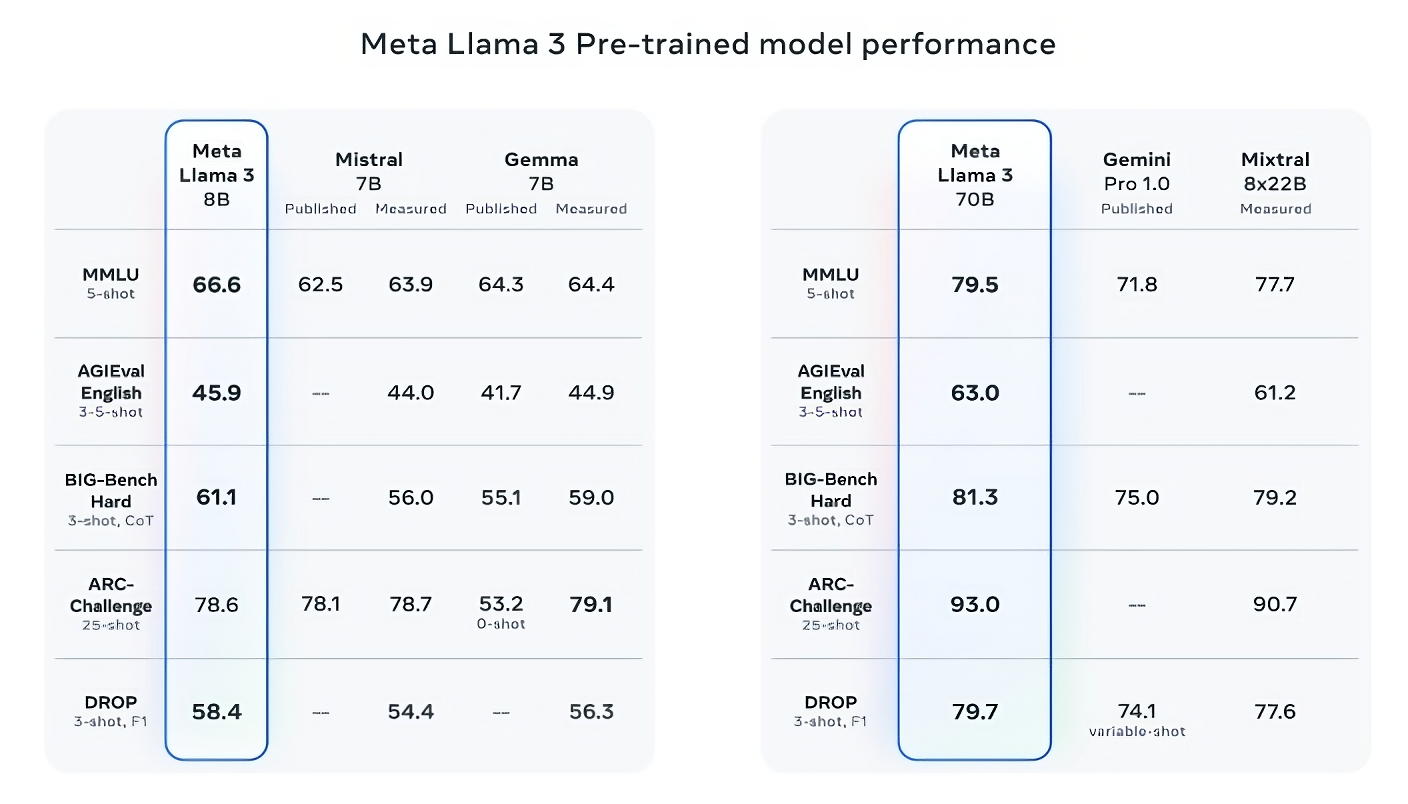

That's quite a claim. So how does Meta support it? Well, the company points to the Llama 3 models' scores on popular AI benchmarks such as MMLU (which attempts to measure knowledge), ARC (which attempts to measure skill acquisition), and DROP (which tests a model's reasoning over chunks of text). As I've written before, the usefulness and validity of these benchmarks is debatable. But for better or worse, they remain one of the few standardized ways that AI players like Meta evaluate their models.

Llama 3 8B outperforms other open source models such as Mistral's Mistral 7B and Google's Gemma 7B, both of which contain 7 billion parameters, on at least nine benchmarks: MMLU, ARC, DROP, GPQA (a set of biology-, physics-, and chemistry-related questions), HumanEval (a code generation test), GSM-8K (math word problems), MATH (another math benchmark), AGIEval (a problem-solving test set), and BIG-Bench Hard (a commonsense reasoning assessment).

Now, Mistral 7B and Gemma 7B aren't exactly at the forefront (Mistral 7B was released last September), and on some of the benchmarks Meta cites, Llama 3 8B scores only a few percentage points higher than either. But Meta also claims that the larger-parameter-count Llama 3 model, Llama 3 70B, is competitive with flagship generative AI models, including Gemini 1.5 Pro, the latest addition to Google's Gemini series.

Image credit: Meta

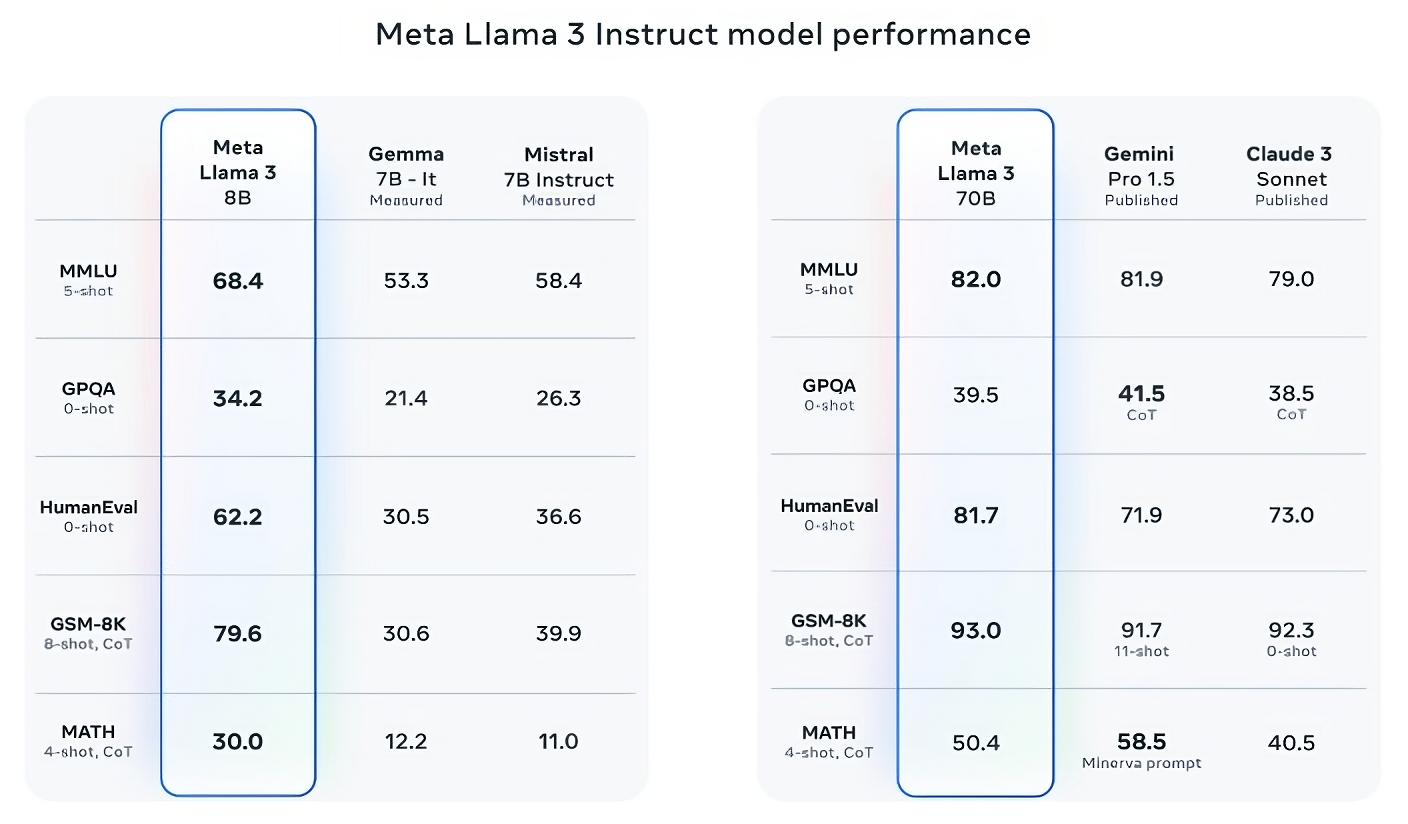

Llama 3 70B outperforms Gemini 1.5 Pro on MMLU, HumanEval, and GSM-8K. And while it falls short of Anthropic's most capable model, Claude 3 Opus, Llama 3 70B scores better than the middle model in the Claude 3 series, Claude 3 Sonnet, on five benchmarks (MMLU, GPQA, HumanEval, GSM-8K, and MATH).

Image credit: Meta

For what it's worth, Meta has also developed its own test set covering use cases that range from coding and writing to reasoning and summarization. And, surprise, Llama 3 70B came out on top against Mistral's Mistral Medium model, OpenAI's GPT-3.5, and Claude Sonnet. Meta says it barred its modeling teams from accessing the set to maintain objectivity, but given that Meta devised the test itself, the results should clearly be taken with a grain of salt.

More qualitatively, Meta says that users of the new Llama models should expect greater “usability,” a lower likelihood of the models refusing to answer questions, and higher accuracy on trivia questions, on questions pertaining to history and STEM fields such as engineering and science, and on general coding recommendations. That's partly thanks to a much larger dataset: a collection of 15 trillion tokens, or a mind-boggling roughly 750 billion words, seven times the size of the Llama 2 training set. (In the AI field, “tokens” refer to subdivided bits of raw data, like the syllables “fan,” “tas,” and “tic” in the word “fantastic.”)
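To make the idea of tokens concrete, here is a minimal sketch in Python of how a word might be split into smaller pieces. It is a toy illustration only: the three-entry vocabulary and the `tokenize` helper are hypothetical, and this greedy longest-match approach is not a description of the tokenizer Llama 3 actually uses.

```python
def tokenize(word, vocab):
    """Greedily split `word` into the longest pieces found in `vocab`."""
    tokens = []
    i = 0
    while i < len(word):
        # Try the longest candidate piece first, shrinking until one matches.
        for j in range(len(word), i, -1):
            piece = word[i:j]
            if piece in vocab:
                tokens.append(piece)
                i = j
                break
        else:
            # Nothing in the vocabulary matches: fall back to one character.
            tokens.append(word[i])
            i += 1
    return tokens


# Hypothetical toy vocabulary, chosen to mirror the "fantastic" example.
TOY_VOCAB = {"fan", "tas", "tic"}

print(tokenize("fantastic", TOY_VOCAB))
```

Production tokenizers typically learn their vocabularies from data rather than using a hand-picked list, so the pieces they produce rarely line up this neatly with syllables.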

Where does this data come from? Good question. Meta wouldn't say, revealing only that it drew from “publicly available sources,” that the set includes four times as much code as the Llama 2 training dataset, and that 5% of the set is non-English data (in approximately 30 languages), included to improve performance in languages other than English. Meta also said that it used synthetic data, i.e. data generated by AI, to create longer documents for training the Llama 3 models, a somewhat controversial approach because of its potential performance drawbacks.

“While the models we are releasing today are only fine-tuned for English output, the increased diversity of the data means that the models can better recognize nuances and patterns and perform more powerfully across a variety of tasks,” Meta wrote in a blog post shared with TechCrunch.

Many generative AI vendors view training data as a competitive advantage, so they keep the data and information about it close to the chest. But the details of training data are also a potential source of IP-related lawsuits, which is another disincentive to reveal much. According to recent reports, Meta, in an effort to keep pace with its AI rivals, at one point used copyrighted e-books for AI training despite warnings from its own lawyers. Meta and OpenAI are the subject of an ongoing lawsuit brought by authors including comedian Sarah Silverman over allegations that the vendors misused copyrighted data for training.

So what about toxicity and bias, two other common problems with generative AI models (including Llama 2)? Has Llama 3 improved in those areas? Yes, Meta claims.

Meta says that it developed a new data-filtering pipeline to improve the quality of its model training data, and that it has updated its pair of generative AI safety suites, Llama Guard and CybersecEval, to try to prevent the misuse of, and unwanted text generations from, Llama 3 models and others. The company is also releasing a new tool, Code Shield, designed to detect code from generative AI models that could introduce security vulnerabilities.

However, filtering is not foolproof, and tools like Llama Guard, CybersecEval, and Code Shield have their limitations. (See: Llama 2's tendency to fabricate answers to questions and divulge personal health and financial information.) We'll have to wait and see how the Llama 3 models perform in real-world settings, including in tests by academics on different benchmarks.

According to Meta, the Llama 3 models are available for download now and power Meta's Meta AI assistant on Facebook, Instagram, WhatsApp, Messenger, and the web. They will soon be hosted in managed form across a wide range of cloud platforms, including AWS, Databricks, Google Cloud, Hugging Face, Kaggle, IBM's WatsonX, Microsoft Azure, Nvidia's NIM, and Snowflake. In the future, versions of the models optimized for hardware from AMD, AWS, Dell, Intel, Nvidia, and Qualcomm will also be made available.

More capable models are also on the way.

Meta said it is currently training Llama 3 models with more than 400 billion parameters. These models will have the ability to “speak in multiple languages,” ingest more data, and understand images and other modalities in addition to text, which would bring the Llama 3 series in line with open releases like Hugging Face's Idefics2.

“Our goals for the near future are to make Llama 3 multilingual and multimodal, have longer contexts, and continue to improve overall performance across core [large language model] capabilities such as reasoning and coding,” Meta wrote in a blog post. “There’s a lot more to come.”

Indeed.