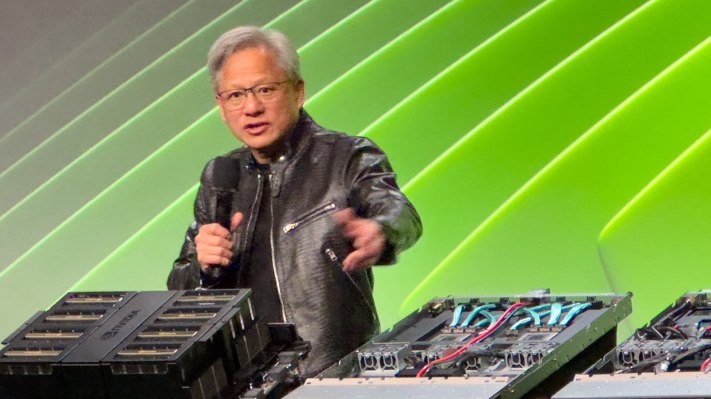

Artificial general intelligence (AGI), often referred to as “strong AI,” “full AI,” “human-level AI,” or “general intelligent action,” represents a significant future leap in the field of artificial intelligence. Unlike narrow AI, which is built for specific tasks (such as detecting product defects, summarizing the news, or building a website), AGI would be able to perform a broad range of cognitive tasks at or above human level. Addressing the press this week at NVIDIA's annual GTC developer conference, CEO Jensen Huang seemed genuinely tired of discussing the subject, not least because, he says, he finds himself misquoted a lot.

It makes sense that the question comes up so often. The concept raises existential questions about humanity's role and control in a future where machines can outthink, outlearn, and outperform humans in almost every domain. At the heart of this concern is the unpredictability of AGI's decision-making processes and goals, which may not align with human values and priorities (a concept explored in science fiction since at least the 1940s). There are concerns that once AGI reaches a certain level of autonomy and capability, it may become impossible to contain or control, leading to scenarios in which its actions cannot be predicted or reversed.

When sensationalist outlets ask for a timeframe, they are often baiting AI professionals into putting a date on the end of humanity, or at least of the status quo. Needless to say, AI CEOs aren't always eager to tackle the subject.

Still, Huang took the time to share his thoughts on the subject with reporters. Predicting when we will see a passable AGI depends on how you define AGI, Huang argues, and he draws a couple of parallels. Even with the complications of time zones, you know when the new year arrives and 2025 rolls around. If you're driving to the San Jose Convention Center (where this year's GTC conference is being held), you generally know you've arrived when you see the giant GTC banners. The crucial point is that we can agree on how to measure that you've arrived where you were hoping to go, whether temporally or geospatially.

“If we specified AGI to be something very specific, a set of tests where a software program can do very well, or maybe 8% better than most people, I believe we will get there within five years,” Huang explains. He suggests the tests could be a legal bar exam, logic tests, economic tests, or perhaps the ability to pass a pre-med exam. Unless the questioner can be very specific about what AGI means in the context of the question, he's not willing to make a prediction. Fair enough.

AI hallucinations can be solved

During a question-and-answer session on Tuesday, Huang was asked what to do about AI hallucinations: the tendency of some AIs to make up answers that sound plausible but aren't based in fact. He appeared visibly frustrated by the question and suggested that hallucinations are easily solvable by making sure answers are well researched.

“Add a rule: For every single answer, you have to look up the answer,” Huang says, referring to this practice as “retrieval-augmented generation.” He describes an approach very similar to basic media literacy: examine the source and the context. Compare the facts contained in the source with known truths, and if the answer is factually inaccurate, even partially, discard the whole source and move on to the next one. “The AI shouldn't just answer; it should do research first to determine which of the answers are best.”
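Huang did not describe an implementation. As a rough illustration of the vetting rule he outlines, here is a minimal Python sketch. It assumes each retrieved source has already been reduced to a set of key/value claims and that a trusted table of known facts exists. `Source`, `is_consistent`, and `answer_from_vetted_sources` are hypothetical names, and a real system would need a far more robust way to extract and compare facts.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Source:
    """Hypothetical retrieved document: a name, the claims it makes, and the answer it supports."""
    name: str
    claims: dict[str, str] = field(default_factory=dict)
    answer: str = ""


def is_consistent(source: Source, known_facts: dict[str, str]) -> bool:
    """Return False if any claim in the source contradicts a known fact."""
    for key, value in source.claims.items():
        if key in known_facts and known_facts[key] != value:
            return False  # even one wrong fact disqualifies the whole source
    return True


def answer_from_vetted_sources(
    sources: list[Source], known_facts: dict[str, str]
) -> Optional[str]:
    """Walk the retrieved sources in order and answer from the first one that passes vetting."""
    for source in sources:
        if is_consistent(source, known_facts):
            return source.answer
        # Otherwise discard the entire source and move on to the next one.
    return None  # no trustworthy source was found


if __name__ == "__main__":
    known = {"boiling_point_c": "100"}
    sources = [
        Source("blog", {"boiling_point_c": "90"}, "Water boils at 90 C."),
        Source("textbook", {"boiling_point_c": "100"}, "Water boils at 100 C at sea level."),
    ]
    print(answer_from_vetted_sources(sources, known))  # Water boils at 100 C at sea level.
```

Returning `None` when every source fails is deliberate. It leaves room for the system to decline to answer rather than fall back on an unverified source.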

For mission-critical answers, such as health advice, NVIDIA's CEO suggests that checking multiple resources and known sources of truth is probably the way forward. Of course, this means the generator creating the answer needs the option to say “I don't know the answer to your question,” or “I can't reach a consensus on what the right answer to this question is,” or even something like “The Super Bowl hasn't happened yet, so I don't know who won.”
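The answer-or-abstain behavior can be sketched in the same spirit. The Python below is a hypothetical illustration, not NVIDIA's method. It assumes answers have already been gathered from several sources and treats a case-insensitive exact match as agreement. It also uses an arbitrary two-thirds threshold (`min_agreement`) before committing to an answer.

```python
from collections import Counter

DONT_KNOW = "I don't know the answer to your question."
NO_CONSENSUS = "I can't reach a consensus on what the right answer to this question is."


def answer_with_consensus(answers: list[str], min_agreement: float = 2 / 3) -> str:
    """Return the majority answer only if enough sources agree; otherwise abstain."""
    cleaned = [a.strip() for a in answers if a.strip()]
    if not cleaned:
        return DONT_KNOW  # no source produced anything usable

    # Count answers case-insensitively; this is a crude stand-in for real agreement checks.
    counts = Counter(a.lower() for a in cleaned)
    top_key, votes = counts.most_common(1)[0]

    if votes / len(cleaned) >= min_agreement:
        # Return the first original spelling of the winning answer.
        return next(a for a in cleaned if a.lower() == top_key)
    return NO_CONSENSUS


if __name__ == "__main__":
    print(answer_with_consensus(["Paris", "paris", "Lyon"]))  # Paris
    print(answer_with_consensus(["Paris", "Lyon", "Rome"]))   # falls back to NO_CONSENSUS
    print(answer_with_consensus([]))                          # falls back to DONT_KNOW
```

Exact string matching is the simplest possible notion of agreement. It is used here only to keep the sketch short. A practical system would need to compare answers by meaning, not by spelling.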